Installation Guide for RAC 11.2.0.1 on VirtualBox (4.2.0)

List of Topics (Linux, Database, RAC, EBS)

Click Here for installing RAC in VM-Ware using Openfiler Software

(Prepare the SAN storage area with Openfiler, discover the SAN storage in VMware, and then follow the rest of the steps in this blog.)


Links for the complete installations:

- Openfiler installation and configuration

- Linux installation

- Grid Installation

- Database Installation

- Oracle Database Documentation >> Installing and Upgrading >> Linux Installation Guides >> Grid Infrastructure Installation Guide

- Certification Information for Oracle Database on Linux x86-64 (Doc ID 1304727.2)

1.PuTTY 2.WinSCP 3. VNC Viewer

================================================================================================================

Download PuTTY: latest release

putty.exe (the SSH and Telnet client itself)

Download link Putty 64bit Download link for putty 32 bit

================================================================================================================

Installation of a RAC database on 2 nodes using VirtualBox with 2 GB of RAM on each node

· If you are using VirtualBox for the first time, enable Intel Virtualization Technology (Intel VT, VT-x/VT-d) in your BIOS settings.

· Install VirtualBox version 4.2.0 (key not required)

·

Install VNC viewer 5.3.0 (Key: N7N4B-LBJ3Q-J4AYM-BB5MD-X8RYA )

| Host Machine Details | Guest Machine Details | Grid & Database Version |
|---|---|---|
| OS: Win7 | OS: OEL-5.3 | Grid Version: 11.2.0.1 |
| RAM in PC: 8 GB | RAM: 2 GB | Database: 11.2.0.1 |
| Bit Version: 64-bit | Bit Version: 32-bit | |

| Machine 1 | Machine 2 | Shareable Disk |
|---|---|---|
| Name: RAC1 | Name: RAC2 | Disk Name: San Storage |
| Hard Disk: 120 GB | Hard Disk: 120 GB | Disk Size: 20 GB |
| Disk Type: Dynamically Sized | Disk Type: Dynamically Sized | Disk Type: Fixed Size |
| Network Adapters: 2 | Network Adapters: 2 | |

| Node | Public IP | Private IP | Database | Users | SID | Cluster |
|---|---|---|---|---|---|---|
| RAC1 | 192.168.1.11 | 10.0.0.11 | DELL | oracle, grid | DELL1, +ASM1 | dellc |
| RAC2 | 192.168.1.12 | 10.0.0.12 | DELL | oracle, grid | DELL2, +ASM2 | dellc |
| RAC3 | 192.168.1.13 | 10.0.0.13 | DELL | oracle, grid | DELL3, +ASM3 | dellc |

SAN for VMware: 192.168.1.40, root

UUID ERROR in VirtualBox

Open a command prompt:

cd "C:\Program Files\Oracle\VirtualBox\"

VBoxManage.exe internalcommands sethduuid "D:\RAC Installations\RAC1\RAC1.vdi"

Hints Before Installing Linux:

Shareable disk: do not attach the shareable disk while installing Linux.

Hint 1: Install the Linux OS on RAC1 only, then copy and unzip the Grid and Oracle software.

Hint 2: Once installation, copying, and unzipping are complete on RAC1, shut down the machine.

Hint 3: Install Linux on RAC2, and shut down the machine after installation.

Hint 4: Create a new fixed-size disk on RAC1 to use as the shareable disk (san1).

Hint 5: Make it shareable from File ---> Virtual Media Manager.

Hint 6: On machine 2 (RAC2), attach the shareable disk (san1), start both machines (RAC1, RAC2), and verify with fdisk -l.

Hint 7: Both machines must have the shared disk attached.

Hint 8: After you partition the disk on node 1 (fdisk /dev/sdb), the change appears automatically on node 2. You can verify this with fdisk -l.

Hint 9: After completing the above steps, check network connectivity with the ping command for both the public and private IPs.

Enable Virtual Technology

Configuration of IP address in Windows:

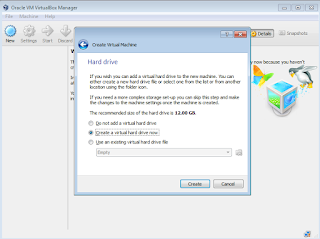

Creation of Machines RAC1 and RAC2

#--- Preparation of Machine RAC1

Note: To speed up the installation, allocate 4 GB of RAM. After installation you can reduce it to 2 GB.

#---Choose Your Linux ISO File

#--- Disable USB and Audio --- and OK

#--- Make the same setup for RAC2

change settings as shown in RAC1

Creation of Machines RAC1 and RAC2 Completed

Installation of Linux In RAC1

#--- Here, provide the IPs for RAC1 in sequence: 192.168.1.11 is your public IP and 10.0.0.11 is your private IP

#--- For machine RAC2, provide the IPs in sequence: 192.168.1.12 is your public IP and 10.0.0.12 is your private IP

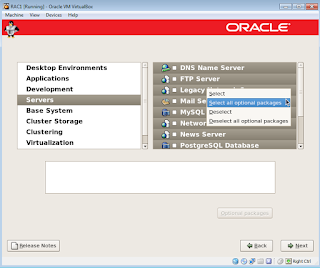

#--- Ctrl+A → Right Click → Select all optional packages

#--- Do the same for all

· Desktop Environments

· Applications

· Development

· Servers

· Base System

· Cluster Storage

· Clustering

· Virtualization

Linux OS Installation with Reduced Set of Packages for Running Oracle Database Server (Doc ID 728346.1)

Defining a "default RPMs" installation of the Oracle Linux (OL) OS (Doc ID 401167.1)

The Oracle Validated RPM Package for Installation Prerequisites (Doc ID 437743.1)

12c, Release 1 (12.1) Grid Infrastructure Installation Guide

#--- Now Check the connectivity using PuTTY and WinSCP 192.168.1.11

#--- Using WinSCP, copy the RPMs, Grid, and Oracle software to /shrf so that this folder can be shared with the other machines using the NFS service

#--- Unzip the Grid and Oracle software as the root user now, while the RAM is still set to 4 GB, so that it runs faster

#--- then shut down the machine using init 0

#--- Change RAM from 4gb to 2gb

#--- Now repeat the above steps on RAC2 to install Linux, and provide the network details as follows

· Public IP (eth0): 192.168.1.12

· Private IP (eth1): 10.0.0.12

· Hostname: rac2.dell.com

· Gateway: 192.168.1.2

RAC2 - Network Parameters

#--- Then shut down the machine (RAC2) using init 0

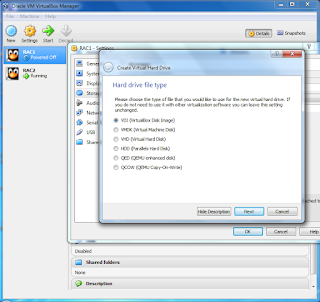

#--- Now create a new disk in RAC1 with "Fixed size", make it "Shareable" from File → Virtual Media Manager, and then attach it to RAC2

Creation of SAN Storage

-->Open,

--> OK
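The same shared-disk step can probably be done from the command line instead of the GUI. The following is only a sketch: the disk file name `san1.vdi`, the controller name `SATA`, and the port number are assumptions that must match your own VM settings, and the helper names `run` and `create_shared_disk` are made up for this example. It prints the commands by default (DRY_RUN=1) so that you can review them first. Both machines should be powered off before the disk is attached.

```shell
#!/bin/sh
# Sketch only: prints the VBoxManage commands unless DRY_RUN=0 is set.
# Assumptions: disk file san1.vdi, storage controller "SATA", port 1.
DRY_RUN="${DRY_RUN:-1}"
DISK="${DISK:-san1.vdi}"

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

create_shared_disk() {
    # 20 GB fixed-size disk (the size is given in MB)
    run VBoxManage createhd --filename "$DISK" --size 20480 --format VDI --variant Fixed
    # Mark the disk as shareable so that both VMs can attach it
    run VBoxManage modifyhd "$DISK" --type shareable
    # Attach the same disk to both nodes
    for vm in RAC1 RAC2; do
        run VBoxManage storageattach "$vm" --storagectl "SATA" --port 1 \
            --device 0 --type hdd --medium "$DISK" --mtype shareable
    done
}

create_shared_disk
```

After reviewing the printed commands, running the script again with DRY_RUN=0 would execute them on the host.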

#--- Reduce the RAM of the two machines (RAC1, RAC2) to 2 GB

Setting up Host file:

RAC - 1

|

RAC - 2

|

# login as: root

root@192.168.1.11's password:

Last login: Tue May 10 09:30:35 2016

[root@rac1 ~]# hostname

rac1.dell.com

[root@rac1 ~]# hostname -i

192.168.1.11

[root@rac1 ~]# vi /etc/hosts

# Do not remove the following line, or various programs

# that require network functionality will fail.

127.0.0.1 localhost.localdomain localhost

::1 localhost6.localdomain6 localhost6

##-- Public-IP

192.168.1.11 rac1.dell.com rac1

192.168.1.12 rac2.dell.com rac2

192.168.1.13 rac3.dell.com rac3

##-- Private-IP

10.0.0.11 rac1-priv.dell.com rac1-priv

10.0.0.12 rac2-priv.dell.com rac2-priv

10.0.0.13 rac3-priv.dell.com rac3-priv

##-- Virtual-IP

192.168.1.21 rac1-vip.dell.com rac1-vip

192.168.1.22 rac2-vip.dell.com rac2-vip

192.168.1.23 rac3-vip.dell.com rac3-vip

##-- SCAN IP

192.168.1.30 dellc-scan.dell.com dellc-scan

192.168.1.31 dellc-scan.dell.com dellc-scan

192.168.1.32 dellc-scan.dell.com dellc-scan

##-- Storage-IP

192.168.1.40 san.dell.com san

[root@rac1 ~]#

[root@rac1 ~]# service network restart

Shutting down interface eth0: [ OK ]

Shutting down interface eth1: [ OK ]

Shutting down loopback interface: [ OK ]

Bringing up loopback interface: [ OK ]

Bringing up interface eth0: [ OK ]

Bringing up interface eth1: [ OK ]

[root@rac1 ~]#

|

login as: root

root@192.168.1.12's password:

Last login: Tue May 10 10:44:19 2016

[root@rac2 ~]# hostname

rac2.dell.com

[root@rac2 ~]# hostname -i

192.168.1.12

[root@rac2 ~]# vi /etc/hosts

# Do not remove the following line, or various programs

# that require network functionality will fail.

127.0.0.1 localhost.localdomain localhost

::1 localhost6.localdomain6 localhost6

##-- Public-IP

192.168.1.11 rac1.dell.com rac1

192.168.1.12 rac2.dell.com rac2

192.168.1.13 rac3.dell.com rac3

##-- Private-IP

10.0.0.11 rac1-priv.dell.com rac1-priv

10.0.0.12 rac2-priv.dell.com rac2-priv

10.0.0.13 rac3-priv.dell.com rac3-priv

##-- Virtual-IP

192.168.1.21 rac1-vip.dell.com rac1-vip

192.168.1.22 rac2-vip.dell.com rac2-vip

192.168.1.23 rac3-vip.dell.com rac3-vip

##-- SCAN IP

192.168.1.30 dellc-scan.dell.com dellc-scan

192.168.1.31 dellc-scan.dell.com dellc-scan

192.168.1.32 dellc-scan.dell.com dellc-scan

##-- Storage-IP

192.168.1.40 san.dell.com san

[root@rac2 ~]#

[root@rac2 ~]# service network restart

Shutting down interface eth0: [ OK ]

Shutting down interface eth1: [ OK ]

Shutting down loopback interface: [ OK ]

Bringing up loopback interface: [ OK ]

Bringing up interface eth0: [ OK ]

Bringing up interface eth1: [ OK ]

[root@rac2 ~]#

|
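Since the node entries in the hosts file above follow a regular pattern (.11, .12, .13 for public, the same on 10.0.0.x for private, and .21, .22, .23 for the virtual IPs), they can be generated instead of typed. This is a minimal sketch; the function name `gen_hosts` is made up for this example, and the SCAN and storage lines are left out on purpose.

```shell
#!/bin/sh
# Prints the per-node /etc/hosts entries for an N-node cluster, using the
# addressing scheme from this guide. gen_hosts is an illustrative name.
DOMAIN="dell.com"

gen_hosts() {
    nodes="$1"
    echo "##-- Public-IP"
    i=1
    while [ "$i" -le "$nodes" ]; do
        echo "192.168.1.$((10 + i)) rac${i}.${DOMAIN} rac${i}"
        i=$((i + 1))
    done
    echo "##-- Private-IP"
    i=1
    while [ "$i" -le "$nodes" ]; do
        echo "10.0.0.$((10 + i)) rac${i}-priv.${DOMAIN} rac${i}-priv"
        i=$((i + 1))
    done
    echo "##-- Virtual-IP"
    i=1
    while [ "$i" -le "$nodes" ]; do
        echo "192.168.1.$((20 + i)) rac${i}-vip.${DOMAIN} rac${i}-vip"
        i=$((i + 1))
    done
}

# Print the entries for a two-node cluster
gen_hosts 2
```

Review the output before appending it to /etc/hosts on each node, so that both nodes end up with identical entries.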

RAC - 1

|

RAC - 2

|

[root@rac1 ~]# nslookup dellc-scan

[root@rac1 ~]# ping 192.168.1.11

PING 192.168.1.11 (192.168.1.11) 56(84) bytes of data.

64 bytes from 192.168.1.11: icmp_seq=1 ttl=64 time=0.041

ms

64 bytes from 192.168.1.11: icmp_seq=2 ttl=64 time=0.171

ms

64 bytes from 192.168.1.11: icmp_seq=3 ttl=64 time=0.055

ms

--- 192.168.1.11 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time

2071ms

rtt min/avg/max/mdev = 0.041/0.089/0.171/0.058 ms

[root@rac1 ~]#

[root@rac1 ~]# ping 192.168.1.12

PING 192.168.1.12 (192.168.1.12) 56(84) bytes of data.

64 bytes from 192.168.1.12: icmp_seq=1 ttl=64 time=3.03 ms

64 bytes from 192.168.1.12: icmp_seq=2 ttl=64 time=0.913

ms

64 bytes from 192.168.1.12: icmp_seq=3 ttl=64 time=0.880

ms

--- 192.168.1.12 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time

2000ms

rtt min/avg/max/mdev = 0.880/1.608/3.032/1.007 ms

[root@rac1 ~]#

[root@rac1 ~]# ping 10.0.0.11

PING 10.0.0.11 (10.0.0.11) 56(84) bytes of data.

64 bytes from 10.0.0.11: icmp_seq=1 ttl=64 time=0.121 ms

64 bytes from 10.0.0.11: icmp_seq=2 ttl=64 time=0.289 ms

64 bytes from 10.0.0.11: icmp_seq=3 ttl=64 time=0.323 ms

--- 10.0.0.11 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time

2001ms

rtt min/avg/max/mdev = 0.121/0.244/0.323/0.089 ms

[root@rac1 ~]#

[root@rac1 ~]# ping 10.0.0.12

PING 10.0.0.12 (10.0.0.12) 56(84) bytes of data.

64 bytes from 10.0.0.12: icmp_seq=1 ttl=64 time=3.12 ms

64 bytes from 10.0.0.12: icmp_seq=2 ttl=64 time=0.550 ms

64 bytes from 10.0.0.12: icmp_seq=3 ttl=64 time=1.40 ms

--- 10.0.0.12 ping statistics ---

3 packets transmitted, 4 received, 0% packet loss, time

3000ms

rtt min/avg/max/mdev = 0.550/1.532/3.120/0.965 ms

[root@rac1 ~]#

[root@rac1 ~]# ping 192.168.1.40

PING 192.168.1.40 (192.168.1.40) 56(84) bytes of data.

64 bytes from 192.168.1.40: icmp_seq=1 ttl=64 time=0.340

ms

64 bytes from 192.168.1.40: icmp_seq=2 ttl=64 time=0.278

ms

64 bytes from 192.168.1.40: icmp_seq=3 ttl=64 time=0.276

ms

--- 192.168.1.40 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time

2999ms

rtt min/avg/max/mdev = 0.245/0.284/0.340/0.040 ms

[root@rac1 ~]#

|

[root@rac2 ~]# ping 192.168.1.12

PING 192.168.1.12 (192.168.1.12) 56(84) bytes of data.

64 bytes from 192.168.1.12: icmp_seq=1 ttl=64 time=0.043

ms

64 bytes from 192.168.1.12: icmp_seq=2 ttl=64 time=0.144

ms

64 bytes from 192.168.1.12: icmp_seq=3 ttl=64 time=0.176

ms

--- 192.168.1.12 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time

2001ms

rtt min/avg/max/mdev = 0.043/0.121/0.176/0.056 ms

[root@rac2 ~]#

[root@rac2 ~]# ping 192.168.1.11

PING 192.168.1.11 (192.168.1.11) 56(84) bytes of data.

64 bytes from 192.168.1.11: icmp_seq=1 ttl=64 time=0.835

ms

64 bytes from 192.168.1.11: icmp_seq=2 ttl=64 time=0.898

ms

64 bytes from 192.168.1.11: icmp_seq=3 ttl=64 time=0.881

ms

--- 192.168.1.11 ping statistics ---

3 packets transmitted, 4 received, 0% packet loss, time

3001ms

rtt min/avg/max/mdev = 0.835/0.875/0.898/0.043 ms

[root@rac2 ~]#

[root@rac2 ~]# ping 10.0.0.11

PING 10.0.0.11 (10.0.0.11) 56(84) bytes of data.

64 bytes from 10.0.0.11: icmp_seq=1 ttl=64 time=0.993 ms

64 bytes from 10.0.0.11: icmp_seq=2 ttl=64 time=0.937 ms

64 bytes from 10.0.0.11: icmp_seq=3 ttl=64 time=1.18 ms

--- 10.0.0.11 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time

2000ms

rtt min/avg/max/mdev = 0.937/1.038/1.185/0.109 ms

[root@rac2 ~]#

[root@rac2 ~]# ping 10.0.0.12

PING 10.0.0.12 (10.0.0.12) 56(84) bytes of data.

64 bytes from 10.0.0.12: icmp_seq=1 ttl=64 time=0.213 ms

64 bytes from 10.0.0.12: icmp_seq=2 ttl=64 time=0.057 ms

64 bytes from 10.0.0.12: icmp_seq=3 ttl=64 time=0.128 ms

--- 10.0.0.12 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time

2000ms

rtt min/avg/max/mdev = 0.057/0.132/0.213/0.065 ms

[root@rac2 ~]#

[root@rac2 ~]# ping 192.168.1.40

PING 192.168.1.40 (192.168.1.40) 56(84) bytes of data.

64 bytes from 192.168.1.40: icmp_seq=1 ttl=64 time=0.282

ms

64 bytes from 192.168.1.40: icmp_seq=2 ttl=64 time=0.232 ms

64 bytes from 192.168.1.40: icmp_seq=3 ttl=64 time=0.273

ms

--- 192.168.1.40 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time

2999ms

rtt min/avg/max/mdev = 0.232/0.350/0.616/0.155 ms

[root@rac2 ~]#

|
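The ping checks above can be wrapped in a small loop so that every address is tested in one go on each node. This is a sketch only: the function name `check_hosts` and the `PING_CMD` variable are assumptions made for this example (the variable exists so that the ping command can be swapped out when trying the function).

```shell
#!/bin/sh
# PING_CMD defaults to a three-packet ping with a five-second deadline.
PING_CMD="${PING_CMD:-ping -c 3 -w 5}"

# check_hosts ADDR...: prints OK or FAIL for each address and returns
# the number of addresses that did not answer.
check_hosts() {
    failed=0
    for addr in "$@"; do
        # PING_CMD is left unquoted on purpose so that its options split
        if $PING_CMD "$addr" >/dev/null 2>&1; then
            echo "OK   $addr"
        else
            echo "FAIL $addr"
            failed=$((failed + 1))
        fi
    done
    return "$failed"
}

# Usage on either node (not run here):
# check_hosts 192.168.1.11 192.168.1.12 10.0.0.11 10.0.0.12 192.168.1.40
```

A non-zero return value means at least one public, private, or storage address is unreachable and should be fixed before continuing.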

Configuration of Shared Storage on both NODES for Openfiler Software:

Node-1 Machine: RAC1

login as: root

root@192.168.1.11's password:

Last login: Mon Feb 20 14:45:28 2017

[root@rac1 ~]# hostname

rac1.dell.com

[root@rac1 ~]#

[root@rac1 ~]# hostname -i

192.168.1.11

[root@rac1 ~]#

[root@rac1 ~]# ifconfig

eth0 Link encap:Ethernet

HWaddr 00:0C:29:FB:3F:8D

inet addr:192.168.1.11

Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fefb:3f8d/64 Scope:Link

UP

BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX

packets:281 errors:0 dropped:0 overruns:0 frame:0

TX

packets:163 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX

bytes:47348 (46.2 KiB) TX bytes:25000 (24.4 KiB)

eth1 Link encap:Ethernet

HWaddr 00:0C:29:FB:3F:97

inet addr:10.0.0.11

Bcast:10.255.255.255 Mask:255.0.0.0

inet6 addr: fe80::20c:29ff:fefb:3f97/64 Scope:Link

UP

BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX

packets:214 errors:0 dropped:0 overruns:0 frame:0

TX

packets:86 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX

bytes:36007 (35.1 KiB) TX bytes:17656 (17.2 KiB)

lo Link

encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP

LOOPBACK RUNNING MTU:16436 Metric:1

RX

packets:3758 errors:0 dropped:0 overruns:0 frame:0

TX

packets:3758 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX

bytes:8380416 (7.9 MiB) TX bytes:8380416 (7.9 MiB)

virbr0 Link encap:Ethernet HWaddr

EA:A2:66:7A:21:4C

inet addr:192.168.122.1 Bcast:192.168.122.255 Mask:255.255.255.0

UP

BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX

packets:0 errors:0 dropped:0 overruns:0 frame:0

TX

packets:20 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX

bytes:0 (0.0 b) TX bytes:3647 (3.5 KiB)

[root@rac1 ~]# fdisk -l

Disk /dev/sda: 1073.7 GB, 1073741824000 bytes

255 heads, 63 sectors/track, 130541 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot

Start

End Blocks Id System

/dev/sda1

*

1 38245 307202931

83 Linux

/dev/sda2

38246 41432

25599577+ 82 Linux swap / Solaris

/dev/sda3

41433 43982

20482875 83 Linux

/dev/sda4

43983 130541 695285167+

5 Extended

/dev/sda5

43983 130541 695285136

83 Linux

[root@rac1 ~]#

[root@rac1 ~]#

The shared disk is not visible yet, so discover the iSCSI target (IQN):

[root@rac1 ~]# iscsiadm -m discovery -t st -p 192.168.1.40

192.168.1.40:3260,1 iqn.2006-01.com.openfiler:tsn.5debe1b1c4b0

[root@rac1 ~]#

[root@rac1 ~]# service iscsi restart

iscsiadm: No matching sessions found

Stopping iSCSI daemon:

iscsid is

stopped

[ OK

]

Starting iSCSI daemon: FATAL: Error inserting cxgb3i

(/lib/modules/2.6.32-300.10.1.el5uek/kernel/drivers/scsi/cxgbi/cxgb3i/cxgb3i.ko):

Unknown symbol in module, or unknown parameter (see dmesg)

FATAL: Error inserting ib_iser

(/lib/modules/2.6.32-300.10.1.el5uek/kernel/drivers/infiniband/ulp/iser/ib_iser.ko):

Unknown symbol in module, or unknown parameter (see dmesg)

[ OK

]

[ OK

]

Setting up iSCSI targets: Logging in to [iface: default,

target: iqn.2006-01.com.openfiler:tsn.5debe1b1c4b0, portal:

192.168.1.40,3260] (multiple)

Login to [iface: default, target:

iqn.2006-01.com.openfiler:tsn.5debe1b1c4b0, portal: 192.168.1.40,3260]

successful.

[ OK

]

[root@rac1 ~]# chkconfig iscsi on

[root@rac1 ~]#

[root@rac1 ~]# chkconfig --list | grep iscsi

iscsi

0:off 1:off

2:on 3:on 4:on 5:on

6:off

iscsid

0:off 1:off 2:off

3:on 4:on 5:on 6:off

[root@rac1 ~]#

[root@rac1 ~]# cat /etc/inittab

[root@rac1 ~]# who -r

run-level 5 2018-01-30 07:57 last=S

[root@rac1 ~]# fdisk -l

Disk /dev/sda: 1073.7 GB, 1073741824000 bytes

255 heads, 63 sectors/track, 130541 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot

Start

End Blocks Id System

/dev/sda1

*

1 38245 307202931

83 Linux

/dev/sda2

38246 41432

25599577+ 82 Linux swap / Solaris

/dev/sda3

41433 43982

20482875 83 Linux

/dev/sda4

43983 130541 695285167+

5 Extended

/dev/sda5

43983 130541 695285136

83 Linux

Disk /dev/sdb: 102.3 GB, 102374572032 bytes

255 heads, 63 sectors/track, 12446 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Disk /dev/sdb doesn't contain

a valid partition table

[root@rac1 ~]#

|

Node-2 Machine: RAC2

login as: root

root@192.168.1.12's password:

Last login: Mon Feb 20 14:47:45 2017

[root@rac2 ~]#

[root@rac2 ~]#

[root@rac2 ~]# hostname

rac2.dell.com

[root@rac2 ~]# hostname -i

192.168.1.12

[root@rac2 ~]#

[root@rac2 ~]# ifconfig

eth0 Link encap:Ethernet

HWaddr 00:0C:29:36:9C:16

inet addr:192.168.1.12

Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe36:9c16/64 Scope:Link

UP

BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX

packets:200 errors:0 dropped:0 overruns:0 frame:0

TX

packets:151 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX

bytes:39700 (38.7 KiB) TX bytes:26672 (26.0 KiB)

eth1 Link encap:Ethernet

HWaddr 00:0C:29:36:9C:20

inet addr:10.0.0.12

Bcast:10.255.255.255 Mask:255.0.0.0

inet6 addr: fe80::20c:29ff:fe36:9c20/64 Scope:Link

UP

BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX

packets:216 errors:0 dropped:0 overruns:0 frame:0

TX

packets:95 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX

bytes:37580 (36.6 KiB) TX bytes:19182 (18.7 KiB)

lo Link

encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP

LOOPBACK RUNNING MTU:16436 Metric:1

RX

packets:4374 errors:0 dropped:0 overruns:0 frame:0

TX

packets:4374 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX

bytes:9177312 (8.7 MiB) TX bytes:9177312 (8.7 MiB)

virbr0 Link encap:Ethernet HWaddr

0E:D4:79:B6:C8:15

inet addr:192.168.122.1 Bcast:192.168.122.255 Mask:255.255.255.0

UP

BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX

packets:0 errors:0 dropped:0 overruns:0 frame:0

TX

packets:27 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX

bytes:0 (0.0 b) TX bytes:4405 (4.3 KiB)

[root@rac2 ~]# fdisk -l

Disk /dev/sda: 1073.7 GB, 1073741824000 bytes

255 heads, 63 sectors/track, 130541 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot

Start

End Blocks Id System

/dev/sda1

*

1 38245 307202931

83 Linux

/dev/sda2

38246 41432

25599577+ 82 Linux swap / Solaris

/dev/sda3

41433 43982

20482875 83 Linux

/dev/sda4

43983 130541 695285167+

5 Extended

/dev/sda5

43983 130541 695285136

83 Linux

[root@rac2 ~]#

[root@rac2 ~]#

The shared disk is not visible yet, so discover the iSCSI target (IQN):

[root@rac2 ~]# iscsiadm -m discovery -t st -p 192.168.1.40

192.168.1.40:3260,1 iqn.2006-01.com.openfiler:tsn.5debe1b1c4b0

[root@rac2 ~]#

[root@rac2 ~]# service iscsi restart

iscsiadm: No matching sessions found

Stopping iSCSI daemon:

iscsid is

stopped

[ OK

]

Starting iSCSI daemon: FATAL: Error inserting cxgb3i

(/lib/modules/2.6.32-300.10.1.el5uek/kernel/drivers/scsi/cxgbi/cxgb3i/cxgb3i.ko):

Unknown symbol in module, or unknown parameter (see dmesg)

FATAL: Error inserting ib_iser

(/lib/modules/2.6.32-300.10.1.el5uek/kernel/drivers/infiniband/ulp/iser/ib_iser.ko):

Unknown symbol in module, or unknown parameter (see dmesg)

[ OK ]

[ OK ]

Setting up iSCSI targets: Logging in to [iface: default,

target: iqn.2006-01.com.openfiler:tsn.5debe1b1c4b0, portal:

192.168.1.40,3260] (multiple)

Login to [iface: default, target:

iqn.2006-01.com.openfiler:tsn.5debe1b1c4b0, portal: 192.168.1.40,3260]

successful.

[ OK

]

[root@rac2 ~]# chkconfig iscsi on

[root@rac2 ~]#

[root@rac2 ~]# chkconfig --list | grep iscsi

iscsi 0:off 1:off 2:on 3:on 4:on 5:on 6:off

iscsid 0:off 1:off 2:off 3:on 4:on 5:on 6:off

[root@rac2 ~]#

[root@rac2 ~]# fdisk -l

Disk /dev/sda: 1073.7 GB, 1073741824000 bytes

255 heads, 63 sectors/track, 130541 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot

Start

End Blocks Id System

/dev/sda1

*

1 38245 307202931

83 Linux

/dev/sda2

38246 41432

25599577+ 82 Linux swap / Solaris

/dev/sda3

41433 43982

20482875 83 Linux

/dev/sda4

43983 130541 695285167+

5 Extended

/dev/sda5

43983 130541 695285136

83 Linux

Disk /dev/sdb: 102.3 GB, 102374572032 bytes

255 heads, 63 sectors/track, 12446 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Disk /dev/sdb doesn't contain

a valid partition table

[root@rac2 ~]#

|
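In the transcripts above, the login to the target happens as a side effect of restarting the iscsi service. If you prefer an explicit login, the IQN can be pulled out of the discovery output first. A minimal sketch, assuming each discovery line has the form "portal,tpgt iqn" as shown above; the function name `parse_iqn` is made up for this example.

```shell
#!/bin/sh
# Extracts the target IQN from "iscsiadm -m discovery" output, where each
# line has the form "<portal>,<tpgt> <iqn>". parse_iqn is an illustrative name.
parse_iqn() {
    awk '{ print $2 }'
}

# Example with the discovery line shown in this guide:
echo "192.168.1.40:3260,1 iqn.2006-01.com.openfiler:tsn.5debe1b1c4b0" | parse_iqn

# On a node, the same function could feed an explicit login (not run here):
# iscsiadm -m discovery -t st -p 192.168.1.40 | parse_iqn | while read -r iqn; do
#     iscsiadm -m node -T "$iqn" -p 192.168.1.40 --login
# done
```

Once the login succeeds, fdisk -l should list the new /dev/sdb device on that node.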

RAC - 1

|

RAC - 2

|

[root@rac1 ~]# fdisk /dev/sdb

Device contains neither a valid DOS partition table, nor

Sun, SGI or OSF disklabel

Building a new DOS disklabel. Changes will remain in

memory only,

until you decide to write them. After that, of course, the

previous

content won't be recoverable.

The number of cylinders for this disk is set to 2610.

There is nothing wrong with that, but this is larger than

1024,

and could in certain setups cause problems with:

1) software that runs at boot time (e.g., old versions of

LILO)

2) booting and partitioning software from other OSs

(e.g., DOS FDISK, OS/2 FDISK)

Warning: invalid flag 0x0000 of partition table 4 will be

corrected by w(rite)

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First cylinder (1-2610, default 1):

Using default value 1

Last cylinder or +size or +sizeM or +sizeK (1-2610,

default 2610):

Using default value 2610

Command (m for help): p

Disk /dev/sdb: 102.3 GB, 102374572032 bytes

255 heads, 63 sectors/track, 12446 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 12446 99972463+ 83 Linux

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

[root@rac1 ~]#

|

|

Sample output for

both RAC-1 and RAC-2

[root@rac2 ~]# fdisk -l

Disk /dev/sda: 1073.7 GB, 1073741824000 bytes

255 heads, 63 sectors/track, 130541 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot

Start

End Blocks Id System

/dev/sda1

*

1 25496 204796588+

83 Linux

/dev/sda2

25497 29320

30716280 82 Linux swap / Solaris

/dev/sda3

29321 33144 30716280

83 Linux

/dev/sda4

33145 130541 782341402+

5 Extended

/dev/sda5

33145 130541 782341371

83 Linux

Disk /dev/sdb: 102.3 GB, 102374572032 bytes

255 heads, 63 sectors/track, 12446 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device

Boot

Start

End Blocks Id System

/dev/sdb1

1 12446 99972463+

83 Linux

[root@rac1 ~]#

|

|

Grid RPM Installation on both Nodes:

RAC - 1

|

RAC - 2

|

[root@rac1 rpms]# su

cd /u01/sftwr/grid/rpm

[root@rac1 ~]# export CVUQDISK_GRP=oinstall

[root@rac1 D]# echo $CVUQDISK_GRP

[root@rac1 D]# rpm -ivh cvuqdisk-1.0.9-1.rpm

scp cvuqdisk* root@rac2:/u01

#--- answer yes if prompted to accept the host key

|

[root@rac2 rpms]# su

cd /u01

rpm -qa cvuqdisk*

rpm -ivh cvuqdisk*

pwd

|

Sample output for

RAC-1 and RAC2

[root@rac1 G]# rpm

-ivh cvuqdisk-1.0.7-1.rpm

Preparing...

########################################### [100%]

Using default group oinstall to install package

1:cvuqdisk

########################################### [100%]

[root@rac1 G]#

|

|

Deleting and Recreating Users with New Passwords:

RAC - 1

|

RAC - 2

|

[root@rac1 rpms]# su

userdel oracle

userdel grid

groupdel oinstall

groupdel dba

groupdel asmdba

groupdel asmadmin

groupdel asmoper

rm -rf /var/mail/oracle

rm -rf /home/oracle/

rm -rf /var/mail/grid

rm -rf /home/grid

groupadd -g 1000 oinstall

groupadd -g 1001 dba

groupadd -g 1002 asmdba

groupadd -g 1003 asmadmin

groupadd -g 1004 asmoper

useradd -u 1100 -g oinstall -G dba,asmdba,asmadmin oracle

useradd -u 1101 -g oinstall -G dba,asmdba,asmadmin,asmoper grid

passwd grid

Changing password for user grid.

New UNIX password: grid

BAD PASSWORD: it is too short

Retype new UNIX password: grid

passwd: all authentication tokens updated successfully.

[root@rac1 rpms]# passwd oracle

Changing password for user oracle.

New UNIX password: oracle

BAD PASSWORD: it is based on a dictionary word

Retype new UNIX password: oracle

passwd: all authentication tokens updated successfully.

[root@rac1 ~]#

[root@rac1 ~]# su - oracle

[oracle@rac1 ~]$ id

uid=1100(oracle) gid=1000(oinstall)

groups=1000(oinstall),1001(dba),1002(asmdba),1003(asmadmin)

[oracle@rac1 ~]$

[oracle@rac1 ~]$ exit

logout

[root@rac1 ~]# su - grid

[grid@rac1 ~]$ id

uid=1101(grid) gid=1000(oinstall)

groups=1000(oinstall),1001(dba),1002(asmdba),1003(asmadmin),1004(asmoper)

[grid@rac1 ~]$ exit

logout

[root@rac1 ~]#

|

[root@rac2 rpms]# su

userdel oracle

userdel grid

groupdel oinstall

groupdel dba

groupdel asmdba

groupdel asmadmin

groupdel asmoper

rm -rf /var/mail/oracle

rm -rf /home/oracle/

rm -rf /var/mail/grid

rm -rf /home/grid

groupadd -g 1000 oinstall

groupadd -g 1001 dba

groupadd -g 1002 asmdba

groupadd -g 1003 asmadmin

groupadd -g 1004 asmoper

useradd -u 1100 -g oinstall -G dba,asmdba,asmadmin oracle

useradd -u 1101 -g oinstall -G dba,asmdba,asmadmin,asmoper grid

passwd grid

Changing password for user grid.

New UNIX password:grid

BAD PASSWORD: it is too short

Retype new UNIX password: grid

passwd: all authentication tokens updated successfully.

[root@rac2 rpms]# passwd oracle

Changing password for user oracle.

New UNIX password: oracle

BAD PASSWORD: it is based on a dictionary word

Retype new UNIX password:oracle

passwd: all authentication tokens updated successfully.

[root@rac2 rpms]#

[root@rac2 ~]# su - oracle

[oracle@rac2 ~]$ id

uid=1100(oracle) gid=1000(oinstall)

groups=1000(oinstall),1001(dba),1002(asmdba),1003(asmadmin)

[oracle@rac2 ~]$

[oracle@rac2 ~]$ exit

logout

[root@rac2 ~]# su - grid

[grid@rac2 ~]$ id

uid=1101(grid) gid=1000(oinstall)

groups=1000(oinstall),1001(dba),1002(asmdba),1003(asmadmin),1004(asmoper)

[grid@rac2 ~]$ exit

logout

[root@rac2 ~]#

|
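The id output above is checked by eye on each node. A small helper can do the same comparison, which may help when the cluster grows to more nodes. This is a sketch only; the function name `has_groups` is made up for this example, and it works on the text that id prints rather than on the user database.

```shell
#!/bin/sh
# has_groups ID_OUTPUT GROUP...: succeeds only if every GROUP appears in
# the "groups=" part of the given `id` output. Illustrative name.
has_groups() {
    id_out="$1"
    shift
    # Keep only the text after "groups="
    groups_part="${id_out#*groups=}"
    for g in "$@"; do
        case "$groups_part" in
            *"($g)"*) ;;
            *) echo "missing group: $g"; return 1 ;;
        esac
    done
    echo "all groups present"
}

# Example with the id output of the oracle user shown in this guide:
oracle_id='uid=1100(oracle) gid=1000(oinstall) groups=1000(oinstall),1001(dba),1002(asmdba),1003(asmadmin)'
has_groups "$oracle_id" oinstall dba asmdba asmadmin

# On a node (not run here): has_groups "$(id grid)" oinstall dba asmdba asmadmin asmoper
```

The parentheses in the match keep dba from being confused with asmdba.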

Setting Directory, Permissions:

RAC - 1

|

RAC - 2

|

[root@rac1 rpms]# su

mkdir -p /u01/app/grid

mkdir -p /u01/app/grid_home

mkdir -p /u01/app/oracle

chown -R grid:oinstall /u01/

chown -R oracle:oinstall /u01/app/oracle

chmod -R 775 /u01

pwd

|

[root@rac2 rpms]# su

mkdir -p /u01/app/grid

mkdir -p /u01/app/grid_home

mkdir -p /u01/app/oracle

chown -R grid:oinstall /u01/

chown -R oracle:oinstall /u01/app/oracle

chmod -R 775 /u01

pwd

|
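Because the same directory commands are typed on both nodes, they fit naturally into one function. The sketch below takes a prefix so that it can be tried in a scratch directory first; the function name `make_rac_dirs` and the prefix argument are assumptions made for this example, and the ownership step is skipped unless the script runs as root with the grid and oracle users present.

```shell
#!/bin/sh
# make_rac_dirs PREFIX: creates the directory layout from this guide under
# PREFIX (use "/" on a real node, a scratch directory for a trial run).
make_rac_dirs() {
    prefix="${1%/}"
    mkdir -p "$prefix/u01/app/grid" \
             "$prefix/u01/app/grid_home" \
             "$prefix/u01/app/oracle"
    chmod -R 775 "$prefix/u01"
    # Ownership changes need root and existing grid/oracle users,
    # so they are skipped otherwise.
    if [ "$(id -u)" -eq 0 ] && id grid >/dev/null 2>&1 && id oracle >/dev/null 2>&1; then
        chown -R grid:oinstall "$prefix/u01"
        chown -R oracle:oinstall "$prefix/u01/app/oracle"
    fi
}

# Trial run in a scratch directory:
scratch="$(mktemp -d)"
make_rac_dirs "$scratch"
ls "$scratch/u01/app"
```

On a node, calling make_rac_dirs / as root should reproduce the commands listed above.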

Setting limits.conf:

RAC - 1

|

RAC - 2

|

[root@rac1 rpms]#

cp /etc/security/limits.conf /etc/security/limits.conf_bkp

vi /etc/security/limits.conf

G$

o

#---Paste the below values at bottom of file

grid soft nofile 131072

grid hard nofile 131072

grid soft nproc 131072

grid hard nproc 131072

grid soft core unlimited

grid hard core unlimited

grid soft memlock 3500000

grid hard memlock 3500000

grid hard stack 32768

# Recommended stack hard limit 32MB for grid installations

oracle soft nofile 131072

oracle hard nofile 131072

oracle soft nproc 131072

oracle hard nproc 131072

oracle soft core unlimited

oracle hard core unlimited

oracle soft memlock unlimited

oracle hard memlock unlimited

oracle soft stack 10240

oracle hard stack 32768

|

[root@rac2 rpms]#

cp /etc/security/limits.conf /etc/security/limits.conf_bkp

vi /etc/security/limits.conf

G$

o

#---Paste the below values at bottom of file

grid soft nofile 131072

grid hard nofile 131072

grid soft nproc 131072

grid hard nproc 131072

grid soft core unlimited

grid hard core unlimited

grid soft memlock 3500000

grid hard memlock 3500000

grid hard stack 32768

# Recommended stack hard limit 32MB for grid installations

oracle soft nofile 131072

oracle hard nofile 131072

oracle soft nproc 131072

oracle hard nproc 131072

oracle soft core unlimited

oracle hard core unlimited

oracle soft memlock unlimited

oracle hard memlock unlimited

oracle soft stack 10240

oracle hard stack 32768

|

Save and

Exit (:wq)
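Pasting the limits by hand works, but running the step twice would leave duplicate lines in the file. One possible alternative is to append each line only when it is missing. This is a sketch, tried here against a scratch file instead of /etc/security/limits.conf; the function name `add_limit` is made up for this example.

```shell
#!/bin/sh
# add_limit FILE LINE: appends LINE to FILE unless an identical line
# already exists. Illustrative name.
add_limit() {
    file="$1"
    line="$2"
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Trial run against a scratch file rather than /etc/security/limits.conf:
conf="$(mktemp)"
for user in grid oracle; do
    add_limit "$conf" "$user soft nofile 131072"
    add_limit "$conf" "$user hard nofile 131072"
done
# A repeated call does not add a duplicate line
add_limit "$conf" "grid soft nofile 131072"
cat "$conf"
```

The remaining values from the list above can be added the same way, one add_limit call per line.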

Setting pam.d file for both nodes:

RAC - 1

|

RAC - 2

|

[root@rac1 rpms]#

vi /etc/pam.d/login

G$

o

#---add the below in last line

session required /lib/security/pam_limits.so

|

[root@rac2 rpms]#

vi /etc/pam.d/login

G$

o

#---add the below in last line

session required /lib/security/pam_limits.so

|

Save and Exit (:wq)

# For Linux 7, check the semaphore parameters on both nodes (rac1, rac2) and set them as follows:

[root@rac1 ~]# cat /proc/sys/kernel/sem

32000 1024000000 500 32000

[root@rac1 ~]# ipcs -ls

------ Semaphore Limits --------

max number of arrays = 32000

max semaphores per array = 32000

max semaphores system wide = 1024000000

max ops per semop call = 500

semaphore max value = 32767

[root@rac1 ~]#

# To make the change permanent, add or change the following line in the file /etc/sysctl.conf. This file is used during the boot process.

[root@rac1 ~]# echo "kernel.sem=250 32000 100 128" >> /etc/sysctl.conf

[root@rac1 ~]# echo 250 32000 100 128 > /proc/sys/kernel/sem

[root@rac1 ~]# sysctl -p

[root@rac1 ~]# cat /proc/sys/kernel/sem

250 32000 100 128

[root@rac1 ~]# ipcs -ls

------ Semaphore Limits --------

max number of arrays = 128

max semaphores per array = 250

max semaphores system wide = 32000

max ops per semop call = 100

semaphore max value = 32767

[root@rac1 ~]# grep kernel.sem /etc/sysctl.conf

kernel.sem = 250 32000 100 128

[root@rac1 ~]#

|
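The four numbers in kernel.sem are easy to mix up, because ipcs -ls lists them in a different order than /proc/sys/kernel/sem. The kernel order is SEMMSL, SEMMNS, SEMOPM, SEMMNI. A small sketch that labels them; the function name `explain_sem` is made up for this example.

```shell
#!/bin/sh
# explain_sem "SEMMSL SEMMNS SEMOPM SEMMNI": labels the four kernel.sem
# fields in the order the kernel reports them. Illustrative name.
explain_sem() {
    # Split the single argument into four positional parameters
    set -- $1
    echo "SEMMSL (max semaphores per array) = $1"
    echo "SEMMNS (max semaphores system wide) = $2"
    echo "SEMOPM (max ops per semop call) = $3"
    echo "SEMMNI (max number of arrays) = $4"
}

# The values set in this guide:
explain_sem "250 32000 100 128"

# On a Linux node (not run here): explain_sem "$(cat /proc/sys/kernel/sem)"
```

The labels line up with the second ipcs -ls output above: 250 per array, 32000 system wide, 100 ops per call, and 128 arrays.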

RAC - 1

|

RAC - 2

|

# This step can also be performed using the sshUserSetup.sh script; the manual steps are shown below:

[root@rac1 rpms]#

su - grid

pwd

cd /home/grid

pwd

mkdir .ssh

vi .ssh/config

iHost *

ForwardX11 no

|

[root@rac2 rpms]#

su - grid

pwd

cd /home/grid

pwd

mkdir .ssh

vi .ssh/config

iHost *

ForwardX11 no

|

Save and Exit (:wq)
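The .ssh/config file above is typed in vi on each node. The same two lines can be written without an editor, which also makes it easy to set the usual permissions on the directory and file. This is a sketch, tried in a scratch directory; the function name `write_ssh_config` and the permission values 700/600 are assumptions of this example and are not part of the original steps.

```shell
#!/bin/sh
# write_ssh_config HOME_DIR: creates HOME_DIR/.ssh/config with the same
# two settings that the vi session above enters by hand. Illustrative name.
write_ssh_config() {
    ssh_dir="$1/.ssh"
    mkdir -p "$ssh_dir"
    chmod 700 "$ssh_dir"
    printf 'Host *\n    ForwardX11 no\n' > "$ssh_dir/config"
    chmod 600 "$ssh_dir/config"
}

# Trial run in a scratch directory; on a node this would be
# write_ssh_config /home/grid, run as the grid user.
scratch_home="$(mktemp -d)"
write_ssh_config "$scratch_home"
cat "$scratch_home/.ssh/config"
```

The same call with /home/oracle, run as the oracle user, would cover the second account.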

Creation of .bashrc for GRID User:

RAC - 1

|

RAC - 2

|

[grid@rac1 ~]$

vi /home/grid/.bashrc

20ddi# .bashrc

# User specific

aliases and functions

alias rm='rm -i'

alias cp='cp -i'

alias mv='mv -i'

# Source global

definitions

if [ -f /etc/bashrc ];

then

. /etc/bashrc

fi

if [ -t 0 ]; then

stty intr ^C

fi

|

[grid@rac2 ~]$

vi /home/grid/.bashrc

20ddi# .bashrc

# User specific

aliases and functions

alias rm='rm -i'

alias cp='cp -i'

alias mv='mv -i'

# Source global

definitions

if [ -f /etc/bashrc ];

then

. /etc/bashrc

fi

if [ -t 0 ]; then

stty intr ^C

fi

|

Save and Exit (:wq)

Creation of .ssh, config for ORACLE User:

RAC - 1

|

RAC - 2

|

[grid@rac1 ~]$

exit

su - oracle

pwd

cd /home/oracle

pwd

mkdir .ssh

vi .ssh/config

iHost *

ForwardX11 no

|

[grid@rac2 ~]$

exit

su - oracle

pwd

cd /home/oracle

pwd

mkdir .ssh

vi .ssh/config

iHost *

ForwardX11 no

|

Save and Exit (:wq)

Creation of .bashrc for ORACLE User:

RAC - 1

|

RAC - 2

|

[oracle@rac1 ~]$ pwd

/home/oracle

[oracle@rac1 ~]$

vi /home/oracle/.bashrc

20ddi# .bashrc

# User specific aliases and functions

alias rm='rm -i'
alias cp='cp -i'
alias mv='mv -i'

# Source global definitions
if [ -f /etc/bashrc ]; then
    . /etc/bashrc
fi

if [ -t 0 ]; then
    stty intr ^C
fi

|

[oracle@rac2 ~]$ pwd

/home/oracle

[oracle@rac2 ~]$

vi /home/oracle/.bashrc

20ddi# .bashrc

# User specific aliases and functions

alias rm='rm -i'
alias cp='cp -i'
alias mv='mv -i'

# Source global definitions
if [ -f /etc/bashrc ]; then
    . /etc/bashrc
fi

if [ -t 0 ]; then
    stty intr ^C
fi

|

Save and Exit (:wq)

OracleASM Configuration:

RAC - 1

|

RAC - 2

|

[oracle@rac1 ~]$ exit

[root@rac1 rpms]# fdisk -l

Disk /dev/sda: 128.8 GB, 128849018880 bytes
255 heads, 63 sectors/track, 15665 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes

   Device Boot      Start         End      Blocks   Id  System
/dev/sda1   *           1          13      104391   83  Linux
/dev/sda2              14       15665   125724690   8e  Linux LVM

Disk /dev/sdb: 21.4 GB, 21474836480 bytes
255 heads, 63 sectors/track, 2610 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes

   Device Boot      Start         End      Blocks   Id  System
/dev/sdb1               1        2610    20964793+  83  Linux

[root@rac1 ~]#

[root@rac1 ~]# oracleasm status
Checking if ASM is loaded: no
Checking if /dev/oracleasm is mounted: no

[root@rac1 ~]# oracleasm configure -i
Configuring the Oracle ASM library driver.

This will configure the on-boot properties of the Oracle ASM library
driver. The following questions will determine whether the driver is
loaded on boot and what permissions it will have. The current values
will be shown in brackets ('[]'). Hitting <ENTER> without typing an
answer will keep that current value. Ctrl-C will abort.

Default user to own the driver interface []: grid
Default group to own the driver interface []: asmadmin
Start Oracle ASM library driver on boot (y/n) [n]: y
Scan for Oracle ASM disks on boot (y/n) [y]: y
Writing Oracle ASM library driver configuration: done

[root@rac1 ~]# oracleasm status
Checking if ASM is loaded: no
Checking if /dev/oracleasm is mounted: no

|

[oracle@rac2 ~]$ exit

[root@rac2 rpms]# fdisk -l

Disk /dev/sda: 128.8 GB, 128849018880 bytes
255 heads, 63 sectors/track, 15665 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes

   Device Boot      Start         End      Blocks   Id  System
/dev/sda1   *           1          13      104391   83  Linux
/dev/sda2              14       15665   125724690   8e  Linux LVM

Disk /dev/sdb: 21.4 GB, 21474836480 bytes
255 heads, 63 sectors/track, 2610 cylinders
Units = cylinders of 16065 * 512 = 8225280 bytes

   Device Boot      Start         End      Blocks   Id  System
/dev/sdb1               1        2610    20964793+  83  Linux

[root@rac2 ~]#

[root@rac2 ~]# oracleasm status
Checking if ASM is loaded: no
Checking if /dev/oracleasm is mounted: no

[root@rac2 ~]# oracleasm configure -i
Configuring the Oracle ASM library driver.

This will configure the on-boot properties of the Oracle ASM library
driver. The following questions will determine whether the driver is
loaded on boot and what permissions it will have. The current values
will be shown in brackets ('[]'). Hitting <ENTER> without typing an
answer will keep that current value. Ctrl-C will abort.

Default user to own the driver interface []: grid
Default group to own the driver interface []: asmadmin
Start Oracle ASM library driver on boot (y/n) [n]: y
Scan for Oracle ASM disks on boot (y/n) [y]: y
Writing Oracle ASM library driver configuration: done

[root@rac2 ~]# oracleasm status
Checking if ASM is loaded: no
Checking if /dev/oracleasm is mounted: no

|
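After answering the configure prompts, the stored answers can be checked on each node. The sketch below assumes the answers are kept in a shell-style file (typically /etc/sysconfig/oracleasm) with the keys ORACLEASM_ENABLED, ORACLEASM_UID and ORACLEASM_GID; treat both the path and the key names as assumptions to verify on your release. check_asmlib_owner is a hypothetical helper name.

```shell
# Hypothetical helper: confirm the ASMLib driver is enabled and owned as expected.
# $1 = config file, $2 = expected user, $3 = expected group
check_asmlib_owner() {
    conf=$1
    want_user=$2
    want_group=$3
    # Pull each value out of KEY=value lines, dropping optional double quotes.
    uid=$(sed -n 's/^ORACLEASM_UID=//p' "$conf" | tr -d '"')
    gid=$(sed -n 's/^ORACLEASM_GID=//p' "$conf" | tr -d '"')
    enabled=$(sed -n 's/^ORACLEASM_ENABLED=//p' "$conf" | tr -d '"')
    [ "$uid" = "$want_user" ] && [ "$gid" = "$want_group" ] && [ "$enabled" = "true" ]
}

# Usage (as root, on each node; the path is an assumption):
#   check_asmlib_owner /etc/sysconfig/oracleasm grid asmadmin && echo "ASMLib config OK"
```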

Creation of OracleASM Disk:

RAC - 1

|

RAC - 2

|

[root@rac1 rpms]#

fdisk -l

oracleasm init

oracleasm createdisk DELLASM /dev/sdb1

oracleasm scandisks

oracleasm listdisks

ll /dev/oracleasm/disks/

pwd

oracleasm querydisk -d DATA10 ---> reports whether the disk is a valid or invalid ASM disk

oracleasm deletedisk DELLASM ---> only if reusing an existing DELLASM disk on which GI & DB were already installed earlier using Openfiler

# lsmod | grep oracleasm
oracleasm 69632 1

# sudo systemctl status oracleasm

|

[root@rac2 rpms]#

oracleasm init

oracleasm scandisks

oracleasm listdisks

ll /dev/oracleasm/disks/

pwd |

Sample output for

RAC-1

[root@rac1 rpm]# oracleasm init
Creating /dev/oracleasm mount point: /dev/oracleasm
Loading module "oracleasm": oracleasm
Mounting ASMlib driver filesystem: /dev/oracleasm

[root@rac1 rpm]# oracleasm createdisk DELLASM /dev/sdb1
Writing disk header: done
Instantiating disk: done

[root@rac1 rpm]# oracleasm scandisks
Reloading disk partitions: done
Cleaning any stale ASM disks...
Scanning system for ASM disks...

[root@rac1 rpm]# oracleasm listdisks
DELLASM

[root@rac1 rpm]# ll /dev/oracleasm/disks/
total 0
brw-rw---- 1 grid asmadmin 8, 17 Feb 4 07:43 DELLASM

[root@rac1 rpm]#

Note for OL 8.10: kernel and ASM packages that work (for the "oracleasm init" issue) with ASMLib version 2:

[root@uatdb01 ~]# uname -r
4.18.0-477.27.1.el8_8.x86_64
[root@uatdb01 ~]# rpm -qa | grep oracleasm
oracleasmlib-2.0.17-1.el8.x86_64
kmod-redhat-oracleasm-2.0.8-17.0.2.el8.x86_64
oracleasm-support-2.1.12-1.el8.x86_64
[root@uatdb01 ~]# hostnamectl
Operating System: Oracle Linux Server 8.10
CPE OS Name: cpe:/o:oracle:linux:8:10:server
Kernel: Linux 4.18.0-477.27.1.el8_8.x86_64
Architecture: x86-64
[root@uatdb01 ~]#

For kernel 5.15 on OL 8.10, version 3 of the ASM library and ASM support packages is required; no kmod package is needed with version 3.
|

|

Sample output for

RAC-2

[root@rac2 u01]# oracleasm init
Creating /dev/oracleasm mount point: /dev/oracleasm
Loading module "oracleasm": oracleasm
Mounting ASMlib driver filesystem: /dev/oracleasm

[root@rac2 u01]# oracleasm scandisks
Reloading disk partitions: done
Cleaning any stale ASM disks...
Scanning system for ASM disks...
Instantiating disk "DELLASM"

[root@rac2 u01]# oracleasm listdisks
DELLASM

[root@rac2 u01]# ll /dev/oracleasm/disks/
total 0
brw-rw---- 1 grid asmadmin 8, 17 Feb 4 07:43 DELLASM

[root@rac2 u01]# pwd

|

|
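Both nodes should list the same ASM disks once scandisks has run on the second node. A minimal sketch of that comparison follows; same_disk_list is a hypothetical helper, and how you collect the second node's output (copy and paste, or ssh) is up to you.

```shell
# Hypothetical helper: succeed only if both nodes report the same set of ASM disks.
# $1 = output of "oracleasm listdisks" on node 1
# $2 = output of "oracleasm listdisks" on node 2
same_disk_list() {
    # Sort both listings so that ordering differences do not matter.
    a=$(printf '%s\n' "$1" | sort)
    b=$(printf '%s\n' "$2" | sort)
    [ "$a" = "$b" ]
}

# Usage (as root on rac1; the value for rac2 is pasted in by hand here):
#   same_disk_list "$(oracleasm listdisks)" "DELLASM" && echo "disk lists match"
```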

/etc/resolv.conf setting:

To overcome the prerequisite check failure "PRVF-5636":

Task resolv.conf Integrity - DNS Response Time for an unreachable Node

PRVF-5636: The DNS response time for an unreachable node exceeded "15000" ms on the following nodes: rac1, rac2

RAC - 1

|

RAC - 2

|

[root@rac1 ~]#

[root@rac1 ~]#

vi /etc/resolv.conf

20ddi# Generated by NetworkManager
search dell.com
nameserver 192.168.1.11
options attempts:2
options timeout:1
# No nameservers found; try putting DNS servers into your
# ifcfg files in /etc/sysconfig/network-scripts like so:
#
# DNS1=xxx.xxx.xxx.xxx
# DNS2=xxx.xxx.xxx.xxx
# DOMAIN=lab.foo.com bar.foo.com
|

[root@rac2 ~]#

[root@rac2 ~]#

vi /etc/resolv.conf

20ddi# Generated by NetworkManager
search dell.com
nameserver 192.168.1.11
options attempts:2
options timeout:1
# No nameservers found; try putting DNS servers into your
# ifcfg files in /etc/sysconfig/network-scripts like so:
#
# DNS1=xxx.xxx.xxx.xxx
# DNS2=xxx.xxx.xxx.xxx
# DOMAIN=lab.foo.com bar.foo.com
|
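Before rerunning the prerequisite check, it can help to confirm that each node's file really contains the attempts and timeout options and exactly one search line. The following is a rough sketch; check_resolv_options is a hypothetical helper and the rules it applies are only the ones visible in the file above.

```shell
# Hypothetical helper: sanity-check a resolv.conf against the settings used in this guide.
# $1 = path to the file (normally /etc/resolv.conf)
check_resolv_options() {
    file=$1
    grep -Eq '^options[[:space:]].*attempts:[0-9]+' "$file" \
        || { echo "missing options attempts"; return 1; }
    grep -Eq '^options[[:space:]].*timeout:[0-9]+' "$file" \
        || { echo "missing options timeout"; return 1; }
    # grep -c exits non-zero on a count of 0, so guard it with "|| true".
    n=$(grep -Ec '^search[[:space:]]' "$file" || true)
    [ "$n" -eq 1 ] || { echo "expected one search line, found $n"; return 1; }
    echo "ok"
}

# Usage (on each node):
#   check_resolv_options /etc/resolv.conf
```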

ntpd Service Setup (/etc/sysconfig/ntpd): Observer/Active Mode

RAC - 1

|

RAC - 2

|

Active Mode Configuration.

Deconfigure NTP so that the Oracle Cluster Time Synchronization Service (ctssd) can synchronize the times of the RAC nodes.

[root@rac1 ~]# crsctl check ctss
CRS-4700: The Cluster Time Synchronization Service is in Observer mode.
[root@rac1 ~]# service ntpd stop
Shutting down ntpd: [ OK ]
[root@rac1 ~]# chkconfig ntpd off
[root@rac1 ~]# mv /etc/ntp.conf /etc/ntp.conf.orig
[root@rac1 ~]# ./runInstaller
[root@rac1 ~]# crsctl check ctss
CRS-4701: The Cluster Time Synchronization Service is in Active mode.
CRS-4702: Offset (in msec): 0
[root@rac1 ~]#

The CTSS daemon can also be switched to Active mode after Grid has been installed: stop the ntpd service and rename the ntp.conf file as shown above, then verify Active mode with the commands below.

[grid@rac1 ~]$ cluvfy comp clocksync -n all
Verifying Clock Synchronization across the cluster nodes
Oracle Clusterware is installed on all nodes.
CTSS resource check passed
Query of CTSS for time offset passed
CTSS is in Active state. Proceeding with check of clock time offsets on all nodes...
Check of clock time offsets passed
Oracle Cluster Time Synchronization Services check passed
Verification of Clock Synchronization across the cluster nodes was successful.
[grid@rac1 ~]$ cluvfy comp clocksync -n all -verbose
|

Active Mode Configuration.

Deconfigure NTP so that the Oracle Cluster Time Synchronization Service (ctssd) can synchronize the times of the RAC nodes.

[root@rac2 ~]# crsctl check ctss
CRS-4700: The Cluster Time Synchronization Service is in Observer mode.
[root@rac2 ~]# service ntpd stop
Shutting down ntpd: [ OK ]
[root@rac2 ~]# chkconfig ntpd off
[root@rac2 ~]# mv /etc/ntp.conf /etc/ntp.conf.orig
[root@rac2 ~]# crsctl check ctss
CRS-4701: The Cluster Time Synchronization Service is in Active mode.
CRS-4702: Offset (in msec): 0
[root@rac2 ~]#

|

Observer Mode Configuration.

[root@rac1 rpms]#

service ntpd stop

cp /etc/sysconfig/ntpd /etc/sysconfig/ntpd_bkp

vi /etc/sysconfig/ntpd
|

Observer Mode Configuration.

[root@rac2 rpms]#

service ntpd stop

cp /etc/sysconfig/ntpd /etc/sysconfig/ntpd_bkp

vi /etc/sysconfig/ntpd
|

# Drop root to id 'ntp:ntp' by default.
OPTIONS="-x -u ntp:ntp -p /var/run/ntpd.pid"

# Set to 'yes' to sync hw clock after successful ntpdate
SYNC_HWCLOCK=no

# Additional options for ntpdate
NTPDATE_OPTIONS=""
|

# Drop root to id 'ntp:ntp' by default.
OPTIONS="-x -u ntp:ntp -p /var/run/ntpd.pid"

# Set to 'yes' to sync hw clock after successful ntpdate
SYNC_HWCLOCK=no

# Additional options for ntpdate
NTPDATE_OPTIONS=""
|

Save and Exit (:wq)

[root@rac1 rpms]#

service ntpd start

chkconfig ntpd on

pwd

|

Save and Exit (:wq)

[root@rac2 rpms]#

service ntpd start

chkconfig ntpd on

pwd
|

Sample output for

both Nodes for Observer Mode Configuration:

[root@rac1 rpm]# service ntpd stop
Shutting down ntpd: [FAILED]
[root@rac1 rpm]# cp /etc/sysconfig/ntpd /etc/sysconfig/ntpd_bkp
[root@rac1 rpm]# vi /etc/sysconfig/ntpd
[root@rac1 rpm]# service ntpd start
ntpd: Synchronizing with time server: [FAILED]
Starting ntpd: [ OK ]
[root@rac1 rpm]# chkconfig ntpd on
[root@rac1 rpm]# pwd

|

|
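The Observer mode setup depends on the -x (slewing) flag being present in the OPTIONS line, and a mistyped quote there is easy to miss. A small sketch to confirm the flag after editing; ntpd_has_slew_option is a hypothetical helper name.

```shell
# Hypothetical helper: succeed only if the OPTIONS line contains a standalone -x flag.
# $1 = path to the ntpd sysconfig file (normally /etc/sysconfig/ntpd)
ntpd_has_slew_option() {
    # Take the last OPTIONS= line, in case the file defines it more than once.
    line=$(grep -E '^OPTIONS=' "$1" | tail -n 1)
    # Strip the key and the double quotes, then pad with spaces so -x matches as a whole word.
    case " $(printf '%s\n' "$line" | sed -e 's/^OPTIONS=//' -e 's/"//g') " in
        *" -x "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Usage (on each node):
#   ntpd_has_slew_option /etc/sysconfig/ntpd && echo "slewing option is set"
```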

Setting Date and Time:

RAC - 1

|

RAC - 2

|

[root@rac1 rpms]# watch -n 1 date

Tue May 10 12:16:09 AST 2016

[root@rac1 grid]# date -s "10 May 2016 12:16:00"

Sample query to check the database time:

SQL> select to_char(sysdate,'DD-MM-YYYY HH24:MI:SS') from dual;

TO_CHAR(SYSDATE,'DD
-------------------
10-05-2016 12:16:01

SQL>

In this case the database time was running 3 minutes behind the current time, the same 3-minute delay as on the server. Once the server time was set with the date -s command, the database time was updated automatically.
|

[root@rac2 rpms]# date

Tue May 10 12:16:06 AST 2016

|
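The two date outputs above differ by 3 seconds. To compare the nodes without reading timestamps by eye, the difference can be computed from epoch seconds. This is only a sketch: clock_offset_ok is a hypothetical helper, and the allowed offset is whatever you choose, not a value taken from Oracle documentation.

```shell
# Hypothetical helper: report the clock difference between two nodes.
# $1, $2 = epoch seconds from each node (output of: date +%s)
# $3     = largest acceptable difference in seconds (your own choice)
clock_offset_ok() {
    diff=$(( $1 - $2 ))
    # Use the absolute value so the argument order does not matter.
    if [ "$diff" -lt 0 ]; then
        diff=$(( -diff ))
    fi
    echo "offset=${diff}s"
    [ "$diff" -le "$3" ]
}

# Usage (run "date +%s" on each node at the same moment, then compare):
#   clock_offset_ok "$(date +%s)" "<value from rac2>" 5
```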

Configuration of sshUserSetup.sh (ORACLE METHOD)

In 11gR2, SSH user equivalence can be set up as shown below.

Node-2 Machine: RAC2

[root@rac1 ~]# hostname -i

192.168.1.11

[root@rac2 ~]# su - grid

[grid@rac2 ~]$ cd .ssh/
[grid@rac2 .ssh]$ ll
total 4
-rw-r--r-- 1 grid oinstall 21 Jul 2 16:08 config
[grid@rac2 .ssh]$

Node-1 Machine: RAC1

login as: root

root@192.168.1.11's password:

Last login: Mon Feb 20 14:45:28 2017

[root@rac1 ~]# hostname

rac1.dell.com

[root@rac1 ~]#

[root@rac1 ~]# hostname -i

192.168.1.11

[root@rac1 ~]# su - grid
[grid@rac1 ~]$ cd .ssh/
[grid@rac1 .ssh]$ ll
total 4
-rw-r--r-- 1 grid oinstall 21 Jul 2 16:08 config
[grid@rac1 .ssh]$
[grid@rac1 grid]$ ls
install      response  runcluvfy.sh  sshsetup  welcome.html
readme.html  rpm       runInstaller  stage
[grid@rac1 grid]$ cd sshsetup/
[grid@rac1 sshsetup]$ ll
total 32
-rwxrwxr-x 1 grid oinstall 32343 Aug 26 2013 sshUserSetup.sh
[grid@rac1 sshsetup]$
[grid@rac1 sshsetup]$ ./sshUserSetup.sh -user grid -hosts "rac1 rac2" -noPromptPassphrase
The output of this script is also logged into /tmp/sshUserSetup_2018-07-02-16-27-50.log
Hosts are rac1 rac2
user is grid
Platform:- Linux
Checking if the remote hosts are reachable
PING rac1.dell.com (192.168.1.11) 56(84) bytes of data.
64 bytes from rac1.dell.com (192.168.1.11): icmp_seq=1 ttl=64 time=0.008 ms
64 bytes from rac1.dell.com (192.168.1.11): icmp_seq=2 ttl=64 time=0.034 ms
64 bytes from rac1.dell.com (192.168.1.11): icmp_seq=3 ttl=64 time=0.032 ms
64 bytes from rac1.dell.com (192.168.1.11): icmp_seq=4 ttl=64 time=0.032 ms
64 bytes from rac1.dell.com (192.168.1.11): icmp_seq=5 ttl=64 time=0.032 ms

--- rac1.dell.com ping statistics ---
5 packets transmitted, 5 received, 0% packet loss, time 4001ms
rtt min/avg/max/mdev = 0.008/0.027/0.034/0.011 ms
PING rac2.dell.com (192.168.1.12) 56(84) bytes of data.
64 bytes from rac2.dell.com (192.168.1.12): icmp_seq=1 ttl=64 time=0.270 ms
64 bytes from rac2.dell.com (192.168.1.12): icmp_seq=2 ttl=64 time=0.250 ms
64 bytes from rac2.dell.com (192.168.1.12): icmp_seq=3 ttl=64 time=0.236 ms
64 bytes from rac2.dell.com (192.168.1.12): icmp_seq=4 ttl=64 time=0.252 ms
64 bytes from rac2.dell.com (192.168.1.12): icmp_seq=5 ttl=64 time=0.266 ms

--- rac2.dell.com ping statistics ---
5 packets transmitted, 5 received, 0% packet loss, time 4002ms
rtt min/avg/max/mdev = 0.236/0.254/0.270/0.023 ms
Remote host reachability check succeeded.
The following hosts are reachable: rac1 rac2.
The following hosts are not reachable: .
All hosts are reachable. Proceeding further...
firsthost rac1
numhosts 2
The script will setup SSH connectivity from the host rac1.dell.com to all
the remote hosts. After the script is executed, the user can use SSH to run
commands on the remote hosts or copy files between this host rac1.dell.com
and the remote hosts without being prompted for passwords or confirmations.

NOTE 1:
As part of the setup procedure, this script will use ssh and scp to copy
files between the local host and the remote hosts. Since the script does not
store passwords, you may be prompted for the passwords during the execution of
the script whenever ssh or scp is invoked.

NOTE 2:
AS PER SSH REQUIREMENTS, THIS SCRIPT WILL SECURE THE USER HOME DIRECTORY
AND THE .ssh DIRECTORY BY REVOKING GROUP AND WORLD WRITE PRIVILEDGES TO THESE
directories.

Do you want to continue and let the script make the above mentioned changes (yes/no)?
yes

The user chose yes
User chose to skip passphrase related questions.
Creating .ssh directory on local host, if not present already
Creating authorized_keys file on local host
Changing permissions on authorized_keys to 644 on local host
Creating known_hosts file on local host
Changing permissions on known_hosts to 644 on local host
Creating config file on local host
If a config file exists already at /home/grid/.ssh/config, it would be backed up to /home/grid/.ssh/config.backup.
Removing old private/public keys on local host
Running SSH keygen on local host with empty passphrase
Generating public/private rsa key pair.
Your identification has been saved in /home/grid/.ssh/id_rsa.
Your public key has been saved in /home/grid/.ssh/id_rsa.pub.
The key fingerprint is:
e4:96:95:8e:f5:e1:59:13:e3:c0:3b:0b:65:9c:c5:ad grid@rac1.dell.com
Creating .ssh directory and setting permissions on remote host rac1
THE SCRIPT WOULD ALSO BE REVOKING WRITE PERMISSIONS FOR group AND others ON THE HOME DIRECTORY FOR grid. THIS IS AN SSH REQUIREMENT.
The script would create ~grid/.ssh/config file on remote host rac1. If a config file exists already at ~grid/.ssh/config, it would be backed up to ~grid/.ssh/config.backup.
The user may be prompted for a password here since the script would be running SSH on host rac1.
Warning: Permanently added 'rac1,192.168.1.11' (RSA) to the list of known hosts.
grid@rac1's password:grid
Done with creating .ssh directory and setting permissions on remote host rac1.
Creating .ssh directory and setting permissions on remote host rac2
THE SCRIPT WOULD ALSO BE REVOKING WRITE PERMISSIONS FOR group AND others ON THE HOME DIRECTORY FOR grid. THIS IS AN SSH REQUIREMENT.
The script would create ~grid/.ssh/config file on remote host rac2. If a config file exists already at ~grid/.ssh/config, it would be backed up to ~grid/.ssh/config.backup.
The user may be prompted for a password here since the script would be running SSH on host rac2.
Warning: Permanently added 'rac2,192.168.1.12' (RSA) to the list of known hosts.
grid@rac2's password:grid
Done with creating .ssh directory and setting permissions on remote host rac2.
Copying local host public key to the remote host rac1
The user may be prompted for a password or passphrase here since the script would be using SCP for host rac1.
grid@rac1's password:grid
Done copying local host public key to the remote host rac1
Copying local host public key to the remote host rac2
The user may be prompted for a password or passphrase here since the script would be using SCP for host rac2.
grid@rac2's password:grid
Done copying local host public key to the remote host rac2
cat: /home/grid/.ssh/known_hosts.tmp: No such file or directory
cat: /home/grid/.ssh/authorized_keys.tmp: No such file or directory
SSH setup is complete.

------------------------------------------------------------------------
Verifying SSH setup
===================
The script will now run the date command on the remote nodes using ssh
to verify if ssh is setup correctly. IF THE SETUP IS CORRECTLY SETUP,
THERE SHOULD BE NO OUTPUT OTHER THAN THE DATE AND SSH SHOULD NOT ASK FOR
PASSWORDS. If you see any output other than date or are prompted for the
password, ssh is not setup correctly and you will need to resolve the
issue and set up ssh again.
The possible causes for failure could be:
1. The server settings in /etc/ssh/sshd_config file do not allow ssh for user grid.
2. The server may have disabled public key based authentication.
3. The client public key on the server may be outdated.
4. ~grid or ~grid/.ssh on the remote host may not be owned by grid.
5. User may not have passed -shared option for shared remote users or may be passing the -shared option for non-shared remote users.
6. If there is output in addition to the date, but no password is asked, it may be a security alert shown as part of company policy. Append the additional text to the <OMS HOME>/sysman/prov/resources/ignoreMessages.txt file.
------------------------------------------------------------------------
--rac1:--
Running /usr/bin/ssh -x -l grid rac1 date to verify SSH connectivity has been setup from local host to rac1.
IF YOU SEE ANY OTHER OUTPUT BESIDES THE OUTPUT OF THE DATE COMMAND OR IF YOU ARE PROMPTED FOR A PASSWORD HERE, IT MEANS SSH SETUP HAS NOT BEEN SUCCESSFUL. Please note that being prompted for a passphrase may be OK but being prompted for a password is ERROR.
Mon Jul 2 16:28:12 AST 2018
------------------------------------------------------------------------
--rac2:--
Running /usr/bin/ssh -x -l grid rac2 date to verify SSH connectivity has been setup from local host to rac2.
IF YOU SEE ANY OTHER OUTPUT BESIDES THE OUTPUT OF THE DATE COMMAND OR IF YOU ARE PROMPTED FOR A PASSWORD HERE, IT MEANS SSH SETUP HAS NOT BEEN SUCCESSFUL. Please note that being prompted for a passphrase may be OK but being prompted for a password is ERROR.
Mon Jul 2 16:28:12 AST 2018
------------------------------------------------------------------------
SSH verification complete.
[grid@rac1 sshsetup]$

[grid@rac1 ~]$ ls -ltrh ~/.ssh/*
total 24
-rw-r--r-- 1 grid oinstall  456 Jul 2 16:28 authorized_keys
-rw-r--r-- 1 grid oinstall   23 Jul 2 16:28 config
-rw-r--r-- 1 grid oinstall   21 Jul 2 16:28 config.backup
-rw------- 1 grid oinstall  883 Jul 2 16:28 id_rsa
-rw-r--r-- 1 grid oinstall  228 Jul 2 16:28 id_rsa.pub
-rw-r--r-- 1 grid oinstall 1197 Jul 2 16:28 known_hosts
[grid@rac1 .ssh]$

Check that similar files were created on node 2:

[grid@rac2 .ssh]$ ls -ltrh ~/.ssh/*
total 12
-rw-r--r-- 1 grid oinstall 228 Jul 2 16:28 authorized_keys
-rw-r--r-- 1 grid oinstall  23 Jul 2 16:28 config
-rw-r--r-- 1 grid oinstall  21 Jul 2 16:28 config.backup
-rw-r--r-- 1 grid oinstall   0 Jul 2 16:28 known_hosts
[grid@rac2 .ssh]$

You should now be able to SSH and SCP between servers without entering passwords.

Follow the same steps for the ORACLE user:

[grid@rac1 sshsetup]$ ./sshUserSetup.sh -user oracle -hosts "rac1 rac2" -noPromptPassphrase
[grid@rac1 sshsetup]$ ssh oracle@rac2
|
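The script's own verification runs the date command on each host. The same check can be repeated later, for example after a reboot, with a short loop. This is a sketch only: verify_ssh_equivalence is a hypothetical helper, and the SSH_CMD override exists purely so the loop can be exercised without a real cluster. BatchMode=yes makes ssh fail instead of prompting for a password, which is exactly the condition user equivalence must avoid.

```shell
# Hypothetical helper: confirm passwordless SSH to every host in a list.
# $1 = space-separated host names, e.g. "rac1 rac2"
verify_ssh_equivalence() {
    # SSH_CMD is an assumption added for testing; it defaults to the real ssh client.
    ssh_cmd=${SSH_CMD:-ssh}
    rc=0
    for host in $1; do
        # BatchMode=yes: never prompt; ConnectTimeout=5: give up on unreachable hosts quickly.
        if $ssh_cmd -o BatchMode=yes -o ConnectTimeout=5 "$host" date >/dev/null 2>&1; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
            rc=1
        fi
    done
    return $rc
}

# Usage (as the grid user, then again as the oracle user):
#   verify_ssh_equivalence "rac1 rac2"
```

Run it from each node in turn, since equivalence has to work in both directions.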

Runcluvfy Test on RAC 1:

Node-1 Machine: RAC1

Recheck the hostname setting on the rac1 and rac2 servers in Linux 7 (Doc ID 2389622.1):

[root@rac1 ~]# cat /etc/hostname
localhost.localdomain
[root@rac1 ~]# hostnamectl --static
localhost
[root@rac1 ~]# hostnamectl set-hostname rac1
[root@rac1 ~]# hostnamectl --static
rac1
[root@rac1 ~]# cat /etc/hostname
rac1

[grid@rac1 ~]$ cd /u01/sftwr/grid
[grid@rac1 grid]$

It is advisable to run yum -y install again for the recommended OS RPMs listed in the GI documentation, to avoid issues during the installation (tested while installing 12.1.0.2 GI on Linux 7.5; use yum install to resolve all dependencies):

[grid@rac1 grid]$
rpm -qa cvuqdisk
rpm -qa binutils
rpm -qa compat*
rpm -qa gcc*
rpm -qa glibc*
rpm -qa libaio*
rpm -qa ksh
rpm -qa make
rpm -qa libXi
rpm -qa libXtst
rpm -qa libgcc
rpm -qa libstdc*
rpm -qa sysstat
rpm -qa nfs-utils
rpm -qa ntp
rpm -qa oracleasm-support
rpm -qa oracleasmlib ## download manually for Linux 7
rpm -qa kmod-oracleasm
rpm -qa tmux
nslookup dellc-scan ## configure the DNS server manually (see Linux installation)
rpm -qa nscd
rpm -qa named
rpm -qa bind-chroot

[root@rac1 ~]# yum -y install xterm* xorg* xauth xclock tmux

[grid@rac1 grid]$ ./runcluvfy.sh stage -pre crsinst -n rac1,rac2 -fixup -verbose

[grid@rac1 grid]$ ./runcluvfy.sh stage -pre crsinst -n rac1,rac2

Performing pre-checks for cluster services setup

Checking node reachability...
Node reachability check passed from node "rac1"

Checking user equivalence...
User equivalence check passed for user "grid"

Checking node connectivity...

Checking hosts config file...
Verification of the hosts config file successful

Node connectivity passed for subnet "192.168.1.0" with node(s) rac2,rac1
TCP connectivity check passed for subnet "192.168.1.0"

Node connectivity passed for subnet "10.0.0.0" with node(s) rac2,rac1
TCP connectivity check passed for subnet "10.0.0.0"

Node connectivity passed for subnet "192.168.122.0" with node(s) rac1
TCP connectivity check passed for subnet "192.168.122.0"

Interfaces found on subnet "192.168.1.0" that are likely candidates for VIP are:
rac2 eth0:192.168.1.12
rac1 eth0:192.168.1.11

Interfaces found on subnet "10.0.0.0" that are likely candidates for a private interconnect are:
rac2 eth1:10.0.0.12
rac1 eth1:10.0.0.11

Checking subnet mask consistency...
Subnet mask consistency check passed for subnet "192.168.1.0".
Subnet mask consistency check passed for subnet "10.0.0.0".
Subnet mask consistency check passed for subnet "192.168.122.0".
Subnet mask consistency check passed.

Node connectivity check passed

Checking multicast communication...

Checking subnet "192.168.1.0" for multicast communication with multicast group "230.0.1.0"...
Check of subnet "192.168.1.0" for multicast communication with multicast group "230.0.1.0" passed.

Checking subnet "10.0.0.0" for multicast communication with multicast group "230.0.1.0"...
Check of subnet "10.0.0.0" for multicast communication with multicast group "230.0.1.0" passed.

Checking subnet "192.168.122.0" for multicast communication with multicast group "230.0.1.0"...
Check of subnet "192.168.122.0" for multicast communication with multicast group "230.0.1.0" passed.

Check of multicast communication passed.

Checking ASMLib configuration.
Check for ASMLib configuration passed.
Total memory check passed
Available memory check passed
Swap space check passed
Free disk space check passed for "rac2:/tmp"
Free disk space check passed for "rac1:/tmp"
Check for multiple users with UID value 1101 passed
User existence check passed for "grid"
Group existence check passed for "oinstall"
Group existence check passed for "dba"
Membership check for user "grid" in group "oinstall" [as Primary] passed
Membership check for user "grid" in group "dba" passed
Run level check passed
Hard limits check passed for "maximum open file descriptors"
Soft limits check passed for "maximum open file descriptors"
Hard limits check passed for "maximum user processes"
Soft limits check passed for "maximum user processes"
System architecture check passed
Kernel version check passed
Kernel parameter check passed for "semmsl"
Kernel parameter check passed for "semmns"
Kernel parameter check passed for "semopm"
Kernel parameter check passed for "semmni"
Kernel parameter check passed for "shmmax"
Kernel parameter check passed for "shmmni"
Kernel parameter check passed for "shmall"
Kernel parameter check passed for "file-max"
Kernel parameter check passed for "ip_local_port_range"
Kernel parameter check passed for "rmem_default"
Kernel parameter check passed for "rmem_max"
Kernel parameter check passed for "wmem_default"
Kernel parameter check passed for "wmem_max"
Kernel parameter check passed for "aio-max-nr"
Package existence check passed for "make"
Package existence check passed for "binutils"
Package existence check passed for "gcc(x86_64)"
Package existence check passed for "libaio(x86_64)"
Package existence check passed for "glibc(x86_64)"
Package existence check passed for "compat-libstdc++-33(x86_64)"
Package existence check passed for "elfutils-libelf(x86_64)"
Package existence check passed for "elfutils-libelf-devel"
Package existence check passed for "glibc-common"
Package existence check passed for "glibc-devel(x86_64)"
Package existence check passed for "glibc-headers"
Package existence check passed for "gcc-c++(x86_64)"
Package existence check passed for "libaio-devel(x86_64)"
Package existence check passed for "libgcc(x86_64)"
Package existence check passed for "libstdc++(x86_64)"
Package existence check passed for "libstdc++-devel(x86_64)"
Package existence check passed for "sysstat"
Package existence check passed for "ksh"
Check for multiple users with UID value 0 passed
Current group ID check passed

Starting check for consistency of primary group of root user
Check for consistency of root user's primary group passed

Starting Clock synchronization checks using Network Time Protocol(NTP)...

NTP Configuration file check started...
NTP Configuration file check passed

Checking daemon liveness...
Liveness check passed for "ntpd"
Check for NTP daemon or service alive passed on all nodes

NTP daemon slewing option check passed

NTP daemon's boot time configuration check for slewing option passed

NTP common Time Server Check started...
Check of common NTP Time Server passed

Clock time offset check from NTP Time Server started...
Clock time offset check passed

Clock synchronization check using Network Time Protocol(NTP) passed

Core file name pattern consistency check passed.

User "grid" is not part of "root" group. Check passed
Default user file creation mask check passed
Checking consistency of file "/etc/resolv.conf" across nodes

File "/etc/resolv.conf" does not have both domain and search entries defined
domain entry in file "/etc/resolv.conf" is consistent across nodes
search entry in file "/etc/resolv.conf" is consistent across nodes
All nodes have one search entry defined in file "/etc/resolv.conf"
The DNS response time for an unreachable node is within acceptable limit on all nodes

File "/etc/resolv.conf" is consistent across nodes

Time zone consistency check passed

Pre-check for cluster services setup was successful.

[grid@rac1 grid]$

[grid@rac1 grid]$
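The pre-check output runs to well over a hundred lines, so a single failed check is easy to overlook. One way to surface problems is to filter the output for failure lines. The sketch below assumes failures contain the word "failed" or a PRVF-nnnn code, as the PRVF-5636 message earlier in this guide does; cluvfy_failures is a hypothetical helper, not an Oracle utility.

```shell
# Hypothetical helper: read cluvfy output on stdin and report failure lines.
cluvfy_failures() {
    # Assumption: failed checks mention "failed" or carry a PRVF-nnnn message code.
    # grep exits non-zero when nothing matches, hence the "|| true".
    failed=$(grep -Ei 'failed|PRVF-[0-9]+' || true)
    if [ -n "$failed" ]; then
        printf '%s\n' "$failed"
        return 1
    fi
    echo "no failures found"
}

# Usage (as the grid user, from the Grid software directory):
#   ./runcluvfy.sh stage -pre crsinst -n rac1,rac2 | cluvfy_failures
```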

[grid@rac1 grid]$ ./runcluvfy.sh stage -pre crsinst -n rac1,rac2 -verbose

Performing pre-checks for cluster services setup

Checking node reachability...

Check: Node reachability from node "rac1"
  Destination Node                      Reachable?
  ------------------------------------  ------------------------
  rac2                                  yes
  rac1                                  yes
Result: Node reachability check passed from node "rac1"

Checking user equivalence...

Check: User equivalence for user "grid"
  Node Name                             Status
  ------------------------------------  ------------------------
  rac2                                  passed
  rac1                                  passed
Result: User equivalence check passed for user "grid"

Checking node connectivity...

Checking hosts config file...
  Node Name                             Status
  ------------------------------------  ------------------------
  rac2                                  passed
  rac1                                  passed

Verification of the hosts config file successful

Interface information for node "rac2"
 Name   IP Address      Subnet          Gateway         Def. Gateway    HW Address        MTU
 ------ --------------- --------------- --------------- --------------- ----------------- ------
 eth0   192.168.1.12    192.168.1.0     0.0.0.0         192.168.1.2     00:0C:29:69:20:80 1500
 eth1   10.0.0.12       10.0.0.0        0.0.0.0         192.168.1.2     00:0C:29:69:20:76 1500

Interface information for node "rac1"
 Name   IP Address      Subnet          Gateway         Def. Gateway    HW Address        MTU
 ------ --------------- --------------- --------------- --------------- ----------------- ------
 eth0   192.168.1.11    192.168.1.0     0.0.0.0         192.168.1.2     00:0C:29:F3:1A:CD 1500
 eth1   10.0.0.11       10.0.0.0        0.0.0.0         192.168.1.2     00:0C:29:F3:1A:D7 1500
 virbr0 192.168.122.1   192.168.122.0   0.0.0.0         192.168.1.2     72:26:D6:9B:0D:B3 1500

Check: Node connectivity of subnet "192.168.1.0"
  Source                          Destination                     Connected?
  ------------------------------  ------------------------------  ----------------
  rac2[192.168.1.12]              rac1[192.168.1.11]              yes
Result: Node connectivity passed for subnet "192.168.1.0" with node(s) rac2,rac1

Check: TCP connectivity of subnet "192.168.1.0"
  Source                          Destination                     Connected?
  ------------------------------  ------------------------------  ----------------
  rac1:192.168.1.11               rac2:192.168.1.12               passed
Result: TCP connectivity check passed for subnet "192.168.1.0"

Check: Node connectivity of subnet "10.0.0.0"
  Source                          Destination                     Connected?
  ------------------------------  ------------------------------  ----------------
  rac2[10.0.0.12]                 rac1[10.0.0.11]                 yes
Result: Node connectivity passed for subnet "10.0.0.0" with node(s) rac2,rac1

Check: TCP connectivity of subnet "10.0.0.0"
  Source                          Destination                     Connected?
  ------------------------------  ------------------------------  ----------------
  rac1:10.0.0.11                  rac2:10.0.0.12                  passed
Result: TCP connectivity check passed for subnet "10.0.0.0"

Check: Node connectivity of subnet "192.168.122.0"
Result: Node connectivity passed for subnet "192.168.122.0" with node(s) rac1

Check: TCP connectivity of subnet "192.168.122.0"
Result: TCP connectivity check passed for subnet "192.168.122.0"

Interfaces found on subnet "192.168.1.0" that are likely candidates for VIP are:
rac2 eth0:192.168.1.12
rac1 eth0:192.168.1.11

Interfaces found on subnet "10.0.0.0" that are likely candidates for a private interconnect are:
rac2 eth1:10.0.0.12
rac1 eth1:10.0.0.11

Checking subnet mask consistency...
Subnet mask consistency check passed for subnet "192.168.1.0".
Subnet mask consistency check passed for subnet "10.0.0.0".
Subnet mask consistency check passed for subnet "192.168.122.0".
Subnet mask consistency check passed.

Result: Node connectivity check passed

Checking multicast communication...

Checking subnet "192.168.1.0" for multicast communication with multicast group "230.0.1.0"...
Check of subnet "192.168.1.0" for multicast communication with multicast group "230.0.1.0" passed.

Checking subnet "10.0.0.0" for multicast communication with multicast group "230.0.1.0"...
Check of subnet "10.0.0.0" for multicast communication with multicast group "230.0.1.0" passed.

Checking subnet "192.168.122.0" for multicast communication with multicast group "230.0.1.0"...
Check of subnet "192.168.122.0" for multicast communication with multicast group "230.0.1.0" passed.

Check of multicast communication passed.

Checking ASMLib configuration.

  Node Name                             Status
  ------------------------------------  ------------------------
  rac2                                  passed
  rac1                                  passed

Result: Check for ASMLib configuration passed.

Check: Total memory

  Node Name     Available                 Required                  Status
  ------------  ------------------------  ------------------------  ----------
  rac2          1.9486GB (2043224.0KB)    1.5GB (1572864.0KB)       passed
  rac1          1.9486GB (2043224.0KB)    1.5GB (1572864.0KB)       passed

Result: Total memory check passed

Check: Available memory

  Node Name     Available                 Required                  Status
  ------------  ------------------------  ------------------------  ----------
  rac2          1.4756GB (1547316.0KB)    50MB (51200.0KB)          passed
  rac1          1.3349GB (1399792.0KB)    50MB (51200.0KB)          passed

Result: Available memory check passed

Check: Swap space

  Node Name     Available                 Required                  Status
  ------------  ------------------------  ------------------------  ----------
  rac2          9.767GB (1.0241428E7KB)   2.9229GB (3064836.0KB)    passed
  rac1          29.2933GB (3.0716272E7KB) 2.9229GB (3064836.0KB)    passed

Result: Swap space check passed
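In the output above, the required swap (3064836 KB) is exactly 1.5 times the reported total memory (2043224 KB). The sketch below reproduces that arithmetic so the figure can be estimated before running cluvfy. The 1.5 factor is inferred from these numbers only, and the function names `required_swap_kb` and `swap_status` are made up for illustration.

```shell
#!/bin/sh
# Sketch only: the 1.5x factor is read off the cluvfy output above,
# it is not taken from cluvfy itself.

# required_swap_kb TOTAL_MEM_KB -> prints 1.5 x TOTAL_MEM_KB
required_swap_kb() {
    echo $(( $1 * 3 / 2 ))
}

# swap_status AVAILABLE_KB REQUIRED_KB -> prints "passed" or "failed"
swap_status() {
    if [ "$1" -ge "$2" ]; then
        echo passed
    else
        echo failed
    fi
}

# Values reported for rac2 in the table above (1.0241428E7KB = 10241428 KB)
required=$(required_swap_kb 2043224)
echo "required swap: ${required}KB"
echo "rac2: $(swap_status 10241428 "$required")"
```

On a live node the two inputs could be read from `/proc/meminfo` instead, for example with `awk '/^MemTotal:/ {print $2}' /proc/meminfo` and `awk '/^SwapTotal:/ {print $2}' /proc/meminfo`.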

Check: Free disk space for "rac2:/tmp"

  Path              Node Name     Mount point   Available     Required      Status
  ----------------  ------------  ------------  ------------  ------------  ------------
  /tmp              rac2          /tmp          9.25GB        1GB           passed

Result: Free disk space check passed for "rac2:/tmp"

Check: Free disk space for "rac1:/tmp"

  Path              Node Name     Mount point   Available     Required      Status
  ----------------  ------------  ------------  ------------  ------------  ------------
  /tmp              rac1          /tmp          27.7652GB     1GB           passed

Result: Free disk space check passed for "rac1:/tmp"

Check: User existence for "grid"

  Node Name     Status                    Comment
  ------------  ------------------------  ------------------------
  rac2          passed                    exists(1101)
  rac1          passed                    exists(1101)

Checking for multiple users with UID value 1101

Result: Check for multiple users with UID value 1101 passed

Result: User existence check passed for "grid"

Check: Group existence for "oinstall"

  Node Name     Status                    Comment
  ------------  ------------------------  ------------------------
  rac2          passed                    exists
  rac1          passed                    exists

Result: Group existence check passed for "oinstall"

Check: Group existence for "dba"

  Node Name     Status                    Comment
  ------------  ------------------------  ------------------------
  rac2          passed                    exists
  rac1          passed                    exists

Result: Group existence check passed for "dba"

Check: Membership of user "grid" in group "oinstall" [as Primary]

  Node Name         User Exists   Group Exists  User in Group Primary       Status
  ----------------  ------------  ------------  ------------  ------------  ------------
  rac2              yes           yes           yes           yes           passed
  rac1              yes           yes           yes           yes           passed

Result: Membership check for user "grid" in group "oinstall" [as Primary] passed

Check: Membership of user "grid" in group "dba"

  Node Name         User Exists   Group Exists  User in Group Status
  ----------------  ------------  ------------  ------------  ----------------
  rac2              yes           yes           yes           passed
  rac1              yes           yes           yes           passed

Result: Membership check for user "grid" in group "dba" passed

Check: Run level

  Node Name     run level                 Required                  Status
  ------------  ------------------------  ------------------------  ----------
  rac2          5                         3,5                       passed
  rac1          5                         3,5                       passed

Result: Run level check passed

Check: Hard limits for "maximum open file descriptors"

  Node Name         Type          Available     Required      Status
  ----------------  ------------  ------------  ------------  ----------------
  rac2              hard          131072        65536         passed
  rac1              hard          131072        65536         passed

Result: Hard limits check passed for "maximum open file descriptors"

Check: Soft limits for "maximum open file descriptors"

  Node Name         Type          Available     Required      Status
  ----------------  ------------  ------------  ------------  ----------------
  rac2              soft          131072        1024          passed
  rac1              soft          131072        1024          passed

Result: Soft limits check passed for "maximum open file descriptors"

Check: Hard limits for "maximum user processes"

  Node Name         Type          Available     Required      Status
  ----------------  ------------  ------------  ------------  ----------------
  rac2              hard          131072        16384         passed
  rac1              hard          131072        16384         passed

Result: Hard limits check passed for "maximum user processes"

Check: Soft limits for "maximum user processes"

  Node Name         Type          Available     Required      Status
  ----------------  ------------  ------------  ------------  ----------------
  rac2              soft          131072        2047          passed
  rac1              soft          131072        2047          passed

Result: Soft limits check passed for "maximum user processes"
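The four limit checks above compare each node's current value against a required minimum. A rough way to repeat that comparison by hand is sketched below. The helper name `check_limit` is hypothetical, the thresholds are the "Required" values from the tables above, and the sample inputs are the values cluvfy reported for rac1 and rac2.

```shell
#!/bin/sh
# Sketch only: mimics the passed/failed comparison shown in the cluvfy tables.

# check_limit NAME ACTUAL REQUIRED
# "unlimited" is treated as satisfying any requirement.
check_limit() {
    name=$1
    actual=$2
    required=$3
    if [ "$actual" = "unlimited" ] || [ "$actual" -ge "$required" ]; then
        echo "$name: $actual (required $required) passed"
    else
        echo "$name: $actual (required $required) failed"
    fi
}

# Values and thresholds copied from the output above
check_limit "hard nofile" 131072 65536
check_limit "soft nofile" 131072 1024
check_limit "hard nproc" 131072 16384
check_limit "soft nproc" 131072 2047
```

To test a live node, the fixed numbers could be replaced with the shell's own limits while logged in as the grid user, for example `check_limit "hard nofile" "$(ulimit -Hn)" 65536`. In bash, `ulimit -Hu` and `ulimit -Su` report the process limits.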

Check: System architecture

  Node Name     Available                 Required                  Status
  ------------  ------------------------  ------------------------  ----------
  rac2          x86_64                    x86_64                    passed
  rac1          x86_64                    x86_64                    passed

Result: System architecture check passed

Check: Kernel version

  Node Name     Available                 Required                  Status
  ------------  ------------------------  ------------------------  ----------
  rac2          2.6.32-200.13.1.el5uek    2.6.18                    passed
  rac1          2.6.32-200.13.1.el5uek    2.6.18                    passed

Result: Kernel version check passed

Check: Kernel parameter for "semmsl"

  Node Name         Current       Configured    Required      Status        Comment
  ----------------  ------------  ------------  ------------  ------------  ------------
  rac2              250           250           250           passed
  rac1              250           250           250           passed

Result: Kernel parameter check passed for "semmsl"

Check: Kernel parameter for "semmns"

  Node Name         Current       Configured    Required      Status        Comment
  ----------------  ------------  ------------  ------------  ------------  ------------
  rac2              32000         32000         32000         passed
  rac1              32000         32000         32000         passed

Result: Kernel parameter check passed for "semmns"
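On Linux the semaphore parameters live together on one line of `/proc/sys/kernel/sem`, in the order semmsl, semmns, semopm, semmni. The sketch below splits such a line and compares the first two fields with the "Required" values shown above. The helper names `report` and `check_sem` are hypothetical, and the last two fields of the sample line (100 and 128) are illustrative values only.

```shell
#!/bin/sh
# Sketch only: checks semmsl and semmns against the thresholds in the output above.

# report NAME CURRENT REQUIRED
report() {
    if [ "$2" -ge "$3" ]; then
        echo "$1 $2 $3 passed"
    else
        echo "$1 $2 $3 failed"
    fi
}

# check_sem "SEMMSL SEMMNS SEMOPM SEMMNI"
check_sem() {
    # Split the four-field line into named values
    read -r semmsl semmns semopm semmni <<EOF
$1
EOF
    report semmsl "$semmsl" 250
    report semmns "$semmns" 32000
}

# Sample line; on a live node use: check_sem "$(cat /proc/sys/kernel/sem)"
check_sem "250 32000 100 128"
```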

Check: Kernel parameter for "semopm"

Node Name Current Configured Required Status Comment