https://www.oracle.com/database/technologies/oracle19c-linux-downloads.html

Oracle Database 19c Release Update & Release Update Revision July 2021 Known Issues (Doc ID 19202107.9)

Patches to apply before upgrading Oracle GI and DB to 19c or downgrading to previous release (Doc ID 2539751.1)

Interoperability Notes: Oracle E-Business Suite Release 12.2 with Oracle Database 19c (Doc ID 2552181.1)

https://infraxpertzz.com/step-by-step-upgrade-oracle-rac-grid-infrastructure-and-database-from-12c-to-19c/

https://docs.rackspace.com/blog/upgrade-oracle-grid-from-12c-to-19c/

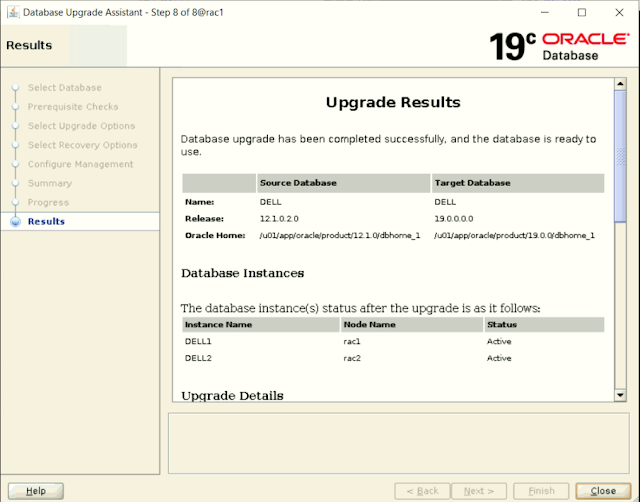

In this post we upgrade Grid Infrastructure (GRID) and the database (RDBMS) from 12c (12.1.0.2) to 19c (19.12.0.0).

The high-level steps are:

1. Upgrade Grid Infrastructure from 12.1.0.2 to 19.3

2. Patch Grid Infrastructure from 19.3 to 19.12

3. Upgrade RDBMS from 12.1.0.2 to 19.3

4. Patch RDBMS from 19.3 to 19.12

===================================================

Switch the VM network adapter to NAT and the interface to DHCP, then download the 19c packages.

Comment out the dns line in the file below to give the VM internet access; remove the comment later to restore the DNS configuration:

grep dns /etc/NetworkManager/NetworkManager.conf

A reboot is required for the change to take effect; restarting the services did not work:

vi /etc/NetworkManager/NetworkManager.conf

**********************

[main]

dns=none

#plugins=ifcfg-rh,ibft

**********************
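The comment/uncomment step on the dns line can be scripted. The following is a minimal sketch, assuming GNU sed and that the line reads exactly dns=none as in the file above; the function name set_dns_none is made up for illustration.

```shell
# Comment out or restore the "dns=none" line in a NetworkManager-style file.
# Usage: set_dns_none <file> off|on
#   off -> comment the line out (VM uses DHCP-provided DNS)
#   on  -> restore the line (back to the static DNS setup)
set_dns_none() {
    conf="$1"
    mode="$2"
    case "$mode" in
        off) sed -i 's/^dns=none/#dns=none/' "$conf" ;;
        on)  sed -i 's/^#dns=none/dns=none/' "$conf" ;;
        *)   echo "usage: set_dns_none <file> off|on" >&2; return 1 ;;
    esac
}

# Example (as root):
# set_dns_none /etc/NetworkManager/NetworkManager.conf off
```

As noted above, a reboot was still needed on this system after editing the file.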

service network restart

ping www.google.com

yum install -y oracle-database-preinstall-19c

[root@rac1 ~]# rpm -qa oracle-*

oracle-logos-70.7.0-1.0.7.el7.noarch

oracle-rdbms-server-12cR1-preinstall-1.0-7.el7.x86_64

oracle-database-preinstall-19c-1.0-3.el7.x86_64

[root@rac1 ~]#

After the 19c preinstall completes, restore the network adapter to host-only and check DNS.

Restore resolv.conf for DNS:

grep dns /etc/NetworkManager/NetworkManager.conf

cat /etc/resolv.conf

search dell.com

nameserver 192.168.1.11

options attempts:2

options timeout:1

Restore the static IPs 192.168.1.11 and 192.168.1.12, then shut down and change the network adapter to host-only.

Check that ping and nslookup respond for every address, then check the 12.1 ASM and cluster services before proceeding further:

ping 192.168.1.11

ping 192.168.1.12

ping 10.0.0.11

ping 10.0.0.12

ping 192.168.1.40

Commands after Reboot Servers:

iscsiadm -m discovery -t st -p 192.168.1.40

systemctl restart iscsi

oracleasm scandisks

oracleasm listdisks

ls -ltr /dev/oracleasm/disks

nslookup dellc-scan

nslookup rac1

nslookup rac2

systemctl status named

systemctl restart named

systemctl restart ntpd

pwd

Check Status of system services (Active/Enable)

systemctl status named

systemctl status ntpd

pwd
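The ping checks above could be wrapped in a small loop so that a single unreachable address stands out. This is only a sketch: check_hosts and the PING_CMD override are illustrative names, not part of any Oracle tooling, and the default ping flags assume Linux iputils.

```shell
# Reachability command; overridable so the loop can be tried without a network.
PING_CMD=${PING_CMD:-"ping -c 1 -W 2"}

# Run the reachability command against every host given as an argument.
# Prints OK/FAIL per host and returns non-zero if any host failed.
check_hosts() {
    failed=""
    for host in "$@"; do
        if $PING_CMD "$host" >/dev/null 2>&1; then
            echo "OK   $host"
        else
            echo "FAIL $host"
            failed="$failed $host"
        fi
    done
    [ -z "$failed" ]
}

# Example with the addresses used in this setup:
# check_hosts 192.168.1.11 192.168.1.12 10.0.0.11 10.0.0.12 192.168.1.40
```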

===================================================

Step 1: Upgrade OPatch Version for GRID_HOME, RDBMS_HOME on all nodes RAC 1 and RAC 2

########################################################

#-- update OPatch in GRID_HOME & RDBMS_HOME in (RAC1, RAC2)

[root@rac2 ~]# $ORACLE_HOME/OPatch/opatch version

OPatch Version: 12.1.0.1.3

OPatch succeeded.

[root@rac2 ~]#

#-- OPATCH update for GRID Home

[root@rac1 ~]# . dell.env

[root@rac1 ~]# mv $ORACLE_HOME/OPatch $ORACLE_HOME/OPatch_bkp

[root@rac1 ~]# unzip /u01/sftwr/p6880880_121010_Linux-x86-64.zip -d $ORACLE_HOME

[root@rac1 ~]# chown -R grid:oinstall $ORACLE_HOME/OPatch

[root@rac1 ~]# chmod -R 775 $ORACLE_HOME/OPatch

[root@rac1 ~]# $ORACLE_HOME/OPatch/opatch version

OPatch Version: 12.2.0.1.27

OPatch succeeded.

[root@rac1 ~]#

- Repeat the same steps on all nodes to update the GRID HOME OPatch version

- Cross-check the OPatch directory against OPatch_bkp, i.e. whether ownership and permissions are set properly

- # chown -R grid:oinstall OPatch

- # chmod -R 775 OPatch

drwxr-xr-x 7 grid oinstall 4096 Sep 19 20:56 OPatch_bkp

drwxr-x--- 15 grid oinstall 4096 Jul 30 17:36 OPatch
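The cross-check of owner and mode between the two directories can also be done with a small helper instead of reading ls output by eye. A sketch, assuming GNU stat (for the -c format option); owner_mode and same_owner_mode are hypothetical helper names.

```shell
# Print "owner:group mode" for a path, e.g. "grid:oinstall 755".
owner_mode() {
    stat -c '%U:%G %a' "$1"
}

# Succeed only when both paths have the same owner, group and octal mode.
same_owner_mode() {
    [ "$(owner_mode "$1")" = "$(owner_mode "$2")" ]
}

# Example, run from inside the Oracle home:
# same_owner_mode OPatch OPatch_bkp || echo "OPatch differs from OPatch_bkp"
```

For the listing above the check would fail, since OPatch_bkp is drwxr-xr-x while OPatch is drwxr-x---.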

-----------------------------------

-- OPATCH update for RDBMS Home

[root@rac1 28553832]# su - oracle

Last login: Sat Oct 9 12:02:10 +03 2021

[oracle@rac1 ~]$ . dell.env

[oracle@rac1 ~]$ mv $ORACLE_HOME/OPatch $ORACLE_HOME/OPatch_bkp

[oracle@rac1 ~]$ unzip /u01/sftwr/p6880880_121010_Linux-x86-64.zip -d $ORACLE_HOME

- Repeat the same steps on all nodes to update the RDBMS HOME OPatch version

- Cross-check the OPatch directory against OPatch_bkp, i.e. whether ownership and permissions are set properly

------------------------------------------------------------

NOTE: for the mandatory patch below, always unzip the patch as the grid/oracle user that owns the $ORACLE_HOME; otherwise the apply fails because it is unable to copy files from <patch dir> to $ORACLE_HOME.

C. The patch is stored in a shared NFS location and there is a permission issue accessing the patch.

The workaround is to move the patch to a local filesystem, unzip it as the grid user, and retry opatchauto.

D. The patch was not unzipped as the grid user; often it was unzipped as root.

ls -l <PATCH_UNZIPPED_PATH> will show the files are owned by root user.

The solution is to unzip the patch as the grid user into an empty directory outside of GRID_HOME, then retry the patch apply.
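Case D can be spotted before running opatchauto by listing anything under the unzipped patch directory that is not owned by the expected user. A minimal sketch; not_owned_by is a hypothetical helper name.

```shell
# List every file or directory under <dir> that is NOT owned by <user>.
# Empty output means the whole tree is owned by that user.
not_owned_by() {
    find "$1" ! -user "$2" -print
}

# Example for the patch used in this walkthrough:
# not_owned_by /u01/sftwr/28553832 grid
```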

Step 2: Apply Grid patch 28553832 individually on all nodes as the root user with opatchauto

Run analyze before applying the patch; after that the patch itself applied in about 5 minutes

########################################################

#-- Before running analyze, make sure the new OPatch is installed in both the Grid and RDBMS homes, or else it gives an error (opatchauto failed with error code 42)

------------------------

#-- Make sure before running opatchauto command all the CRS services on all nodes must be UP and running

#-- Unzip patch 28553832 with Grid User

#-- Run Analyze in new session with root user by setting appropriate env. path variable

watch -n 1 'crsctl check cluster -all'

[grid@rac1 sftwr]$ unzip p28553832_12102190115forOCW_Linux-x86-64.zip -d /u01/sftwr

[grid@rac1 sftwr]$ ls -ltr

total 3042268

drwxr-xr-x 4 grid oinstall 95 Feb 11 2019 28553832

-rwxr-xr-x 1 grid oinstall 396536622 Oct 9 11:40 p28553832_12102190115forOCW_Linux-x86-64.zip

[root@rac1 ~]# . dell.env

[root@rac1 ~]# $ORACLE_HOME/OPatch/opatch version

OPatch Version: 12.2.0.1.27

OPatch succeeded.

[root@rac1 ~]# export PATH=$PATH:$ORACLE_HOME/OPatch

[root@rac1 ~]# opatchauto apply /u01/sftwr/28553832 -analyze

==Following patches were SUCCESSFULLY analyzed to be applied:

Patch: /u01/sftwr/28553832/28553832

Log: /u01/app/oracle/product/12.1.0/dbhome_1/cfgtoollogs/opatchauto/core/opatch/opatch2021-10-09_12-06-26PM_1.log

OPatchauto session completed at Sat Oct 9 12:06:29 2021

Time taken to complete the session 0 minute, 56 seconds

[root@rac1 ~]#

------------------------

[root@rac1 ~]# opatchauto apply /u01/sftwr/28553832 -oh /u01/app/grid_home -ocmrf /u01/app/grid_home/OPatch/ocm/bin/emocmrsp

OPatchauto session completed at Sat Oct 9 12:17:29 2021

Time taken to complete the session 5 minutes, 22 seconds

------------------------

-- To check applied patches

[grid@rac1 ~]$ . dell.env

[grid@rac1 ~]$ $ORACLE_HOME/OPatch/opatch lsinventory |grep -i 28553832

Patch 28553832 : applied on Sat Oct 09 12:14:02 AST 2021

Patch description: "OCW Interim patch for 28553832"

21976167, 21694632, 23095976, 20115586, 28553832, 20883009, 19164099

[grid@rac1 ~]$

[grid@rac1 ~]$ $ORACLE_HOME/OPatch/opatch lsinventory |grep -i 21255373

19368917, 21255373, 22393909, 25206650, 25164540, 20579351, 20408163

[grid@rac1 ~]$

#-- Repeat same steps at node 2 to apply GRID patch 28553832

==================================================

If opatchauto fails for any reason, most likely some patch step did not complete properly. Reset the patching state with the commands below:

$ORACLE_HOME/bin/clscfg -patch

[root@rac2 ~]# $ORACLE_HOME/bin/clscfg -patch

clscfg: -patch mode specified

clscfg: EXISTING configuration version 5 detected.

clscfg: version 5 is 12c Release 1.

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

[root@rac2 ~]# $ORACLE_HOME/bin/crsctl stop rollingpatch

CRS-1171: Rejecting rolling patch mode change because the patch level is not consistent across all nodes in the cluster. The patch level on nodes rac1 is not the same as the patch level [0] found on nodes rac2.

CRS-4000: Command Stop failed, or completed with errors.

[root@rac2 ~]#

STEP 3: UPGRADING GRID from 12.1.0.2 to 19.3.0

########################################################

#-- mkdir -p /u01/app/19_grid_home

#-- Make sure you have installed the 19c preinstall package with yum before proceeding.

yum install -y oracle-database-preinstall-19c

------------------------------------------------------

[root@rac1 ~]# chmod -R 775 /u01/app/

[root@rac1 ~]# su - grid

Last login: Fri Oct 8 20:38:44 +03 2021 on pts/0

[grid@rac1 ~]$ mkdir -p /u01/app/19_grid_home

[grid@rac1 ~]$ mkdir -p /u01/app/grid19c   #-- 19c grid base on rac1

[grid@rac1 ~]$

[grid@rac2 ~]$ mkdir -p /u01/app/19_grid_home

[grid@rac2 ~]$ mkdir -p /u01/app/grid19c   #-- 19c grid base on rac2

[grid@rac1 ~]$

[grid@rac1 ~]$ unzip /u01/sftwr/LINUX.X64_193000_grid_home.zip -d /u01/app/19_grid_home/

watch -n 1 'crsctl check cluster -all'

watch -n 1 'srvctl status database -d dell'

crsctl stat res -t -init

srvctl status database -d dell

Instance DELL1 is not running on node rac1

Instance DELL2 is not running on node rac2

[root@rac2 ~]# crsctl check cluster -all

**************************************************************

rac1:

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

**************************************************************

rac2:

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

**************************************************************
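Since opatchauto and the upgrade both expect every CRS service to be up on all nodes, the output above can be checked mechanically rather than by eye. A sketch that only parses text in the format shown above; all_services_online is a hypothetical name and the function does not call crsctl itself.

```shell
# Read "crsctl check cluster -all" output on stdin.
# Succeeds only if at least one CRS- line is present and every
# CRS- line ends with "is online".
all_services_online() {
    awk '
        /CRS-/ {
            n++
            if ($0 !~ /is online[[:space:]]*$/) bad++
        }
        END { exit !(n > 0 && bad == 0) }
    '
}

# Example (as root or grid, with the Grid home bin directory in PATH):
# crsctl check cluster -all | all_services_online || echo "some CRS service is not online"
```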

------------------------------------------------------------

[root@rac1 rpm]# ls -ltr

-rw-r--r-- 1 grid oinstall 11412 Mar 13 2019 cvuqdisk-1.0.10-1.rpm

[root@rac1 rpm]# pwd

/u01/app/19_grid_home/cv/rpm

[root@rac1 rpm]# rpm -qa cvuqdisk

cvuqdisk-1.0.9-1.x86_64

[root@rac1 rpm]#

[root@rac1 rpm]# export CVUQDISK_GRP=oinstall

[root@rac2 rpm]# rpm -ivh cvuqdisk-1.0.10-1.rpm --nodeps --force

Preparing... ################################# [100%]

Updating / installing...

1:cvuqdisk-1.0.10-1 ################################# [100%]

[root@rac2 sftwr]#

[root@rac2 sftwr]# rpm -qa cvuqdisk

cvuqdisk-1.0.10-1.x86_64

[root@rac2 sftwr]#

================================================================================================================

================================================================================================================

[grid@rac1 19_grid_home]$

[grid@rac1 19_grid_home]$ ./runcluvfy.sh stage -pre crsinst -upgrade -rolling -src_crshome /u01/app/grid_home -dest_crshome /u01/app/19_grid_home -dest_version 19.0.0.0.0 -fixup -verbose

[root@rac1 sftwr]# /tmp/CVU_19.0.0.0.0_grid/runfixup.sh

All Fix-up operations were completed successfully.

[root@rac2 sftwr]#

#-- Informational Failures result of runcluvfy checks:

Failures were encountered during execution of CVU verification request "stage -pre crsinst".

Verifying Physical Memory ...FAILED

rac2: PRVF-7530 : Sufficient physical memory is not available on node "rac2"

[Required physical memory = 8GB (8388608.0KB)]

rac1: PRVF-7530 : Sufficient physical memory is not available on node "rac1"

[Required physical memory = 8GB (8388608.0KB)]

Verifying ACFS Driver Checks ...FAILED

PRVG-6096 : Oracle ACFS driver is not supported on the current operating system

version for Oracle Clusterware release version "19.0.0.0.0".

Verifying Network Time Protocol (NTP) ...FAILED

Verifying chrony daemon is synchronized with at least one external time

source ...FAILED

rac2: PRVG-13606 : chrony daemon is not synchronized with any external time

source on node "rac2".

rac1: PRVG-13606 : chrony daemon is not synchronized with any external time

source on node "rac1".

Verifying OLR Integrity ...FAILED

rac2: PRVG-2033 : Permissions of file "/u01/app/grid_home/cdata/rac2.olr" did

not match the expected octal value on node "rac2". [Expected = "0600" ;

Found = "0775"]

rac1: PRVG-2033 : Permissions of file "/u01/app/grid_home/cdata/rac1.olr" did

not match the expected octal value on node "rac1". [Expected = "0600" ;

Found = "0775"]

Verifying RPM Package Manager database ...INFORMATION

PRVG-11250 : The check "RPM Package Manager database" was not performed because

it needs 'root' user privileges.

CVU operation performed: stage -pre crsinst

Date: Dec 21, 2021 1:17:56 PM

CVU home: /u01/app/19_grid_home/

User: grid

#########################################################

Solutions for the failures detected by runcluvfy:

1. #-- To fix the chrony daemon check, just restart the ntpd service on both nodes

Verifying Network Time Protocol (NTP) ...FAILED

Verifying chrony daemon is synchronized with at least one external time

source ...FAILED

PRVG-13606 : chrony daemon is not synchronized with any external time source on node "rac1".

PRVG-13606 : chrony daemon is not synchronized with any external time source on node "rac2".

[root@rac1 ~]# systemctl restart ntpd

[root@rac1 ~]# systemctl enable ntpd

[root@rac2 ~]# systemctl restart ntpd

[root@rac2 ~]# systemctl enable ntpd

#########################################################

2. #-- To fix OLR Integrity, change the file permission to 600 and rerun cluvfy

If the OLR check fails with the error below, change the permission of the olr file on both rac1 and rac2, then re-run cluvfy to check the status

Verifying OLR Integrity ...FAILED

rac2: PRVG-2033 : Permissions of file "/u01/app/grid_home/cdata/rac2.olr" did

not match the expected octal value on node "rac2". [Expected = "0600" ;

Found = "0775"]

After the permission change cluvfy passed, and the installer did not report the OLR error either

[root@rac1 ~]# chmod -R 0600 /u01/app/grid_home/cdata/rac1.olr

[root@rac2 ~]# chmod -R 0600 /u01/app/grid_home/cdata/rac2.olr

Verifying OLR Integrity ...PASSED

================================================================================================================

================================================================================================================

Complete execution of gridSetup.sh takes approx. 1 hr 50 mins with 4GB RAM and 1 processor

#-- Root.sh script log (Executing upgrade step 19 of 19)

================================================================================================================

Steps: Install Grid Software

unset ORACLE_BASE

unset ORACLE_HOME

unset ORACLE_SID

cd /u01/app/19_grid_home/

[root@rac1 ~]# systemctl status named

[root@rac2 ~]# systemctl status named

Using Dry-Run Upgrade Mode to Check System Upgrade Readiness:

[grid@rac1 19_grid_home]$ ./gridSetup.sh -dryRunForUpgrade

Actual Upgrade:

[grid@rac1 19_grid_home]$ ./gridSetup.sh

Screen-1 : Upgrade Oracle Grid Infrastructure

Screen-2 : Let it use its own already configured SSH connectivity (just click Next) (approx. time 5 mins)

Screen-3 : Do not check Register with EM

Screen-4 : Oracle base is /u01/app/grid19c

Run the rootupgrade.sh scripts when prompted (approx. times per node are noted below):

[root@rac1 ~]# /u01/app/19_grid_home/rootupgrade.sh (Approx. time 20Mins)

[root@rac2 ~]# /u01/app/19_grid_home/rootupgrade.sh (Approx. time 15Mins)

To follow the installer log updates:

[root@rac1 ~]# tailf /u01/app/19_grid_home/cfgtoollogs/oracle.crs*.log

================================================================================================================

================================================================================================================

[root@rac1 ~]#

[root@rac1 ~]# grep grid dell.env

#export ORACLE_HOME=/u01/app/grid_home

export ORACLE_HOME=/u01/app/19_grid_home

crsctl query crs releasepatch

crsctl query crs releaseversion

crsctl stat res -t

crsctl query crs softwareversion -all

crsctl query crs activeversion -f

---- As the grid user srvctl cannot check the database status (see PRCD-1229 below), but as root it works

[grid@rac2 ~]$ srvctl status database -d dell

PRCD-1229 : An attempt to access configuration of database dell was rejected because its version 12.1.0.2.0 differs from the program version 19.0.0.0.0. Instead run the program from /u01/app/oracle/product/12.1.0/dbhome_1.

[grid@rac2 ~]$

[root@rac2 app]# srvctl status database -d dell

Instance DELL1 is not running on node rac1

Instance DELL2 is not running on node rac2

[root@rac2 app]#

[grid@rac1 ~]$ cat dell.env

#export ORACLE_HOME=/u01/app/grid_home

export ORACLE_HOME=/u01/app/19_grid_home

export PATH=$ORACLE_HOME/bin:$PATH

TNS_ADMIN=$ORACLE_HOME/network/admin

export ORACLE_SID=+ASM1

[grid@rac1 ~]$

[grid@rac1 ~]$ . dell.env

[grid@rac1 ~]$ sqlplus / as sysasm

SQL*Plus: Release 19.0.0.0.0 - Production on Fri Oct 8 10:20:52 2021

Version 19.3.0.0.0

Copyright (c) 1982, 2019, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.3.0.0.0

SQL>

==================================================================

------------------------

#-- creation of sample table in u1 schema

grant connect, resource to u1 identified by u1;

alter user u1 quota unlimited on USERS;

conn u1/u1

create table emp (EName varchar(15), ENo number);

insert into emp (EName, ENo) values ('aaa',10);

insert into emp (EName, ENo) values ('bbb',20);

insert into emp (EName, ENo) values ('ccc',30);

commit;

select * from emp;

show user;

STEP 4: Upgrade GRID HOME from 19.3 to 19.12

###########################################

#-- Unzip patch 19.12 as the grid user

------------------------------------------------------

[grid@rac1 sftwr]$ unzip p32895426_190000_Linux-x86-64.zip

========================================================

Upgrading Opatch for 19C Grid Home on RAC1, RAC2

========================================================

1.2.1.1 OPatch Utility Information

You must use the OPatch utility version 12.2.0.1.25 or later

[root@rac1 ~]# . dell.env

[root@rac1 ~]# $ORACLE_HOME/OPatch/opatch version

OPatch Version: 12.2.0.1.17

OPatch succeeded.

[root@rac1 ~]#

[root@rac1 ~]# mv $ORACLE_HOME/OPatch $ORACLE_HOME/OPatch_bkp

[root@rac1 ~]# unzip /u01/sftwr/p6880880_121010_Linux-x86-64.zip -d /u01/app/19_grid_home/

[root@rac1 ~]# chown -R grid:oinstall $ORACLE_HOME/OPatch

[root@rac1 ~]# chmod -R 775 $ORACLE_HOME/OPatch

[root@rac1 ~]# $ORACLE_HOME/OPatch/opatch version

OPatch Version: 12.2.0.1.27

OPatch succeeded.

[root@rac1 ~]#
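The minimum OPatch version requirement can be checked in a script rather than by reading the output. The sketch below assumes GNU sort (for -V, version sort) and the "OPatch Version:" line format shown in the transcript; version_ge and opatch_version_from are hypothetical helper names.

```shell
# Extract the version number from "opatch version" output read on stdin.
opatch_version_from() {
    awk '/^OPatch Version:/ { print $3 }'
}

# Succeed if dotted version $1 is greater than or equal to $2.
version_ge() {
    lowest=$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)
    [ "$lowest" = "$2" ]
}

# Example:
# current=$("$ORACLE_HOME/OPatch/opatch" version | opatch_version_from)
# version_ge "$current" 12.2.0.1.25 || echo "OPatch $current is too old for this patch"
```

With the values from this walkthrough, 12.2.0.1.17 fails the check against 12.2.0.1.25 and 12.2.0.1.27 passes it.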

#-- update OPatch in RAC2 Grid 19C home

========================================================

========================================================

Check patch 32895426 for conflicts on rac1 as the grid user, before applying, without setting $PATH

========================================================

$ORACLE_HOME/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /u01/sftwr/32895426/32904851

$ORACLE_HOME/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /u01/sftwr/32895426/32916816

$ORACLE_HOME/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /u01/sftwr/32895426/32915586

$ORACLE_HOME/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /u01/sftwr/32895426/32918050

$ORACLE_HOME/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /u01/sftwr/32895426/32585572

pwd
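The five conflict checks differ only in the sub-patch directory, so they can be driven from a loop. A sketch under the assumption that each sub-patch lives in a directory whose name is purely numeric, as in the paths above; list_subpatches is a hypothetical helper and it only lists directories, it does not run opatch.

```shell
# Print the numeric-named sub-directories of an unzipped bundle patch,
# one full path per line. Anything with a non-numeric name is skipped.
list_subpatches() {
    for d in "$1"/*/; do
        name=$(basename "$d")
        case "$name" in
            ''|*[!0-9]*) ;;              # not a patch number, skip
            *) echo "$1/$name" ;;
        esac
    done
}

# Example, as the grid user:
# for p in $(list_subpatches /u01/sftwr/32895426); do
#     "$ORACLE_HOME/OPatch/opatch" prereq CheckConflictAgainstOHWithDetail -phBaseDir "$p"
# done
```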

[grid@rac1 ~]$ $ORACLE_HOME/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /u01/sftwr/32895426/32904851

Oracle Interim Patch Installer version 12.2.0.1.17

Copyright (c) 2021, Oracle Corporation. All rights reserved.

PREREQ session

Oracle Home : /u01/app/19_grid_home

Central Inventory : /u01/app/oraInventory

from : /u01/app/19_grid_home/oraInst.loc

OPatch version : 12.2.0.1.17

OUI version : 12.2.0.7.0

Log file location : /u01/app/19_grid_home/cfgtoollogs/opatch/opatch2021-10-08_10-46-02AM_1.log

Invoking prereq "checkconflictagainstohwithdetail"

Prereq "checkConflictAgainstOHWithDetail" passed.

OPatch succeeded.

[grid@rac1 ~]$

[grid@rac1 ~]$

[grid@rac1 ~]$ $ORACLE_HOME/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /u01/sftwr/32895426/32916816

Oracle Interim Patch Installer version 12.2.0.1.17

Copyright (c) 2021, Oracle Corporation. All rights reserved.

PREREQ session

Oracle Home : /u01/app/19_grid_home

Central Inventory : /u01/app/oraInventory

from : /u01/app/19_grid_home/oraInst.loc

OPatch version : 12.2.0.1.17

OUI version : 12.2.0.7.0

Log file location : /u01/app/19_grid_home/cfgtoollogs/opatch/opatch2021-10-08_10-46-23AM_1.log

Invoking prereq "checkconflictagainstohwithdetail"

Prereq "checkConflictAgainstOHWithDetail" passed.

OPatch succeeded.

[grid@rac1 ~]$

========================================================

Run analyze for the 19.12 GRID upgrade patch 32895426 on rac1 first, without setting $PATH

========================================================

1.2.2 One-off Patch Conflict Detection and Resolution

$ORACLE_HOME/OPatch/opatchauto apply /u01/sftwr/32895426 -analyze

[grid@rac1 ~]$ $

[root@rac1 ~]# grep PATH ~/*.env

#export PATH=$ORACLE_HOME/bin:$PATH

[root@rac1 ~]#

[root@rac1 ~]#

[root@rac1 ~]# echo $PATH

/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin

[root@rac1 ~]# echo $PATH

/u01/app/19_grid_home/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin

[root@rac1 ~]# #-- Comment out PATH in grid.env and log in again in a new root session

[root@rac1 ~]# echo $PATH

/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin

[root@rac1 ~]# export ORACLE_HOME=/u01/app/19_grid_home

[root@rac1 ~]# $ORACLE_HOME/OPatch/opatchauto apply /u01/sftwr/32895426 -analyze

OPatchauto session is initiated at Fri Oct 8 10:57:55 2021

System initialization log file is /u01/app/19_grid_home/cfgtoollogs/opatchautodb/systemconfig2021-10-08_10-58-03AM.log.

Session log file is /u01/app/19_grid_home/cfgtoollogs/opatchauto/opatchauto2021-10-08_10-58-59AM.log

The id for this session is 14W4

Executing OPatch prereq operations to verify patch applicability on home /u01/app/19_grid_home

Patch applicability verified successfully on home /u01/app/19_grid_home

Executing patch validation checks on home /u01/app/19_grid_home

Patch validation checks successfully completed on home /u01/app/19_grid_home

OPatchAuto successful.

--------------------------------Summary--------------------------------

Analysis for applying patches has completed successfully:

Host:rac1

CRS Home:/u01/app/19_grid_home

Version:19.0.0.0.0

==Following patches were SUCCESSFULLY analyzed to be applied:

Patch: /u01/sftwr/32895426/32916816

Log: /u01/app/19_grid_home/cfgtoollogs/opatchauto/core/opatch/opatch2021-10-08_10-59-46AM_1.log

Patch: /u01/sftwr/32895426/32915586

Log: /u01/app/19_grid_home/cfgtoollogs/opatchauto/core/opatch/opatch2021-10-08_10-59-46AM_1.log

Patch: /u01/sftwr/32895426/32585572

Log: /u01/app/19_grid_home/cfgtoollogs/opatchauto/core/opatch/opatch2021-10-08_10-59-46AM_1.log

Patch: /u01/sftwr/32895426/32918050

Log: /u01/app/19_grid_home/cfgtoollogs/opatchauto/core/opatch/opatch2021-10-08_10-59-46AM_1.log

Patch: /u01/sftwr/32895426/32904851

Log: /u01/app/19_grid_home/cfgtoollogs/opatchauto/core/opatch/opatch2021-10-08_10-59-46AM_1.log

Following homes are skipped during patching as patches are not applicable:

/u01/app/oracle/product/12.1.0/dbhome_1

OPatchauto session completed at Fri Oct 8 11:01:25 2021

Time taken to complete the session 3 minutes, 30 seconds

[root@rac1 ~]#

========================================================

Completed in about 4 mins: analyze run for the 19.12 GRID upgrade patch 32895426 on rac1, without setting $PATH

========================================================

[grid@rac1 ~]$

$ORACLE_HOME/OPatch/opatch lspatches

$ORACLE_HOME/OPatch/opatch lsinventory | grep description

$ORACLE_HOME/OPatch/opatch lsinventory | grep applied

pwd

------------------------------------------------------------

========================================================

Run the 19.12 GRID upgrade patch 32895426 on rac1; set $PATH first

Also verify how many homes are registered in the global and local inventory.

cat /etc/oraInst.loc

cat /u01/app/grid_home/oraInst.loc

========================================================

1.2.4 opatchauto ---- (approx. time 25 mins with 4GB RAM and 1 processor; on node 2, with or without running analyze first, it took 60 mins)

The utility can be run in parallel on the cluster nodes except for the first (any) node.

[root@rac1 ~]# crsctl check cluster -all

**************************************************************

rac1:

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

**************************************************************

rac2:

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

**************************************************************

Before applying the 19.12 patch, make a tar backup of the Oracle Home.
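No backup command is recorded in these notes, so the following is only a sketch of one way to take it. backup_home is a hypothetical helper, /u01/backup is a made-up destination, and it is typically run as root so that root-owned files inside the Grid home can be read.

```shell
# Create a timestamped .tar.gz of an Oracle home inside <dest_dir>
# and print the archive path on success.
backup_home() {
    src_home="$1"
    dest_dir="$2"
    archive="$dest_dir/$(basename "$src_home")_$(date +%Y%m%d_%H%M%S).tar.gz"
    # -C keeps paths in the archive relative to the parent of the home
    tar -czf "$archive" -C "$(dirname "$src_home")" "$(basename "$src_home")" \
        && echo "$archive"
}

# Example (destination directory is an assumption and must already exist):
# backup_home /u01/app/19_grid_home /u01/backup
```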

Open a new terminal and apply the patch with the correct PATH:

[root@rac1 ~]# export ORACLE_HOME=/u01/app/19_grid_home

[root@rac1 ~]# echo $PATH

/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/root/bin

[root@rac1 ~]# export PATH=$PATH:$ORACLE_HOME/OPatch

[root@rac1 ~]# echo $PATH

/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/root/bin:/u01/app/19_grid_home/OPatch

[root@rac1 ~]#

[root@rac1 ~]# $ORACLE_HOME/OPatch/opatchauto apply /u01/sftwr/32895426 -oh /u01/app/19_grid_home

OPatchauto session is initiated at Fri Oct 8 11:05:38 2021

System initialization log file is /u01/app/19_grid_home/cfgtoollogs/opatchautodb/systemconfig2021-10-08_11-05-47AM.log.

Session log file is /u01/app/19_grid_home/cfgtoollogs/opatchauto/opatchauto2021-10-08_11-06-06AM.log

The id for this session is BEV4

Executing OPatch prereq operations to verify patch applicability on home /u01/app/19_grid_home

Patch applicability verified successfully on home /u01/app/19_grid_home

Executing patch validation checks on home /u01/app/19_grid_home

Patch validation checks successfully completed on home /u01/app/19_grid_home

Performing prepatch operations on CRS - bringing down CRS service on home /u01/app/19_grid_home

Prepatch operation log file location: /u01/app/grid/crsdata/rac1/crsconfig/crs_prepatch_apply_inplace_rac1_2021-10-08_11-07-58AM.log

CRS service brought down successfully on home /u01/app/19_grid_home

Start applying binary patch on home /u01/app/19_grid_home

Binary patch applied successfully on home /u01/app/19_grid_home

Performing postpatch operations on CRS - starting CRS service on home /u01/app/19_grid_home

Postpatch operation log file location: /u01/app/grid/crsdata/rac1/crsconfig/crs_postpatch_apply_inplace_rac1_2021-10-08_11-21-54AM.log

CRS service started successfully on home /u01/app/19_grid_home

OPatchAuto successful.

--------------------------------Summary--------------------------------

Patching is completed successfully. Please find the summary as follows:

Host:rac1

CRS Home:/u01/app/19_grid_home

Version:19.0.0.0.0

Summary:

==Following patches were SUCCESSFULLY applied:

Patch: /u01/sftwr/32895426/32585572

Log: /u01/app/19_grid_home/cfgtoollogs/opatchauto/core/opatch/opatch2021-10-08_11-11-51AM_1.log

Patch: /u01/sftwr/32895426/32904851

Log: /u01/app/19_grid_home/cfgtoollogs/opatchauto/core/opatch/opatch2021-10-08_11-11-51AM_1.log

Patch: /u01/sftwr/32895426/32915586

Log: /u01/app/19_grid_home/cfgtoollogs/opatchauto/core/opatch/opatch2021-10-08_11-11-51AM_1.log

Patch: /u01/sftwr/32895426/32916816

Log: /u01/app/19_grid_home/cfgtoollogs/opatchauto/core/opatch/opatch2021-10-08_11-11-51AM_1.log

Patch: /u01/sftwr/32895426/32918050

Log: /u01/app/19_grid_home/cfgtoollogs/opatchauto/core/opatch/opatch2021-10-08_11-11-51AM_1.log

OPatchauto session completed at Fri Oct 8 11:30:33 2021

Time taken to complete the session 24 minutes, 56 seconds

[root@rac1 ~]#

========================================================

(Approx. time 25 Mins)

Completed in 25 mins: 19.12 GRID upgrade patch 32895426 applied on rac1 with $PATH set

========================================================

#-- Validate the 19.12 Grid Home patch status from lsinventory

[root@rac1 ~]# . dell.env

[root@rac1 ~]# su - grid

Last login: Fri Oct 8 11:36:53 +03 2021

[grid@rac1 ~]$ . dell.env

crsctl query crs releasepatch

crsctl query crs releaseversion

crsctl stat res -t

crsctl query crs softwareversion -all

crsctl query crs activeversion -f

[grid@rac1 ~]$ crsctl query crs releasepatch

Oracle Clusterware release patch level is [3998055650] and the complete list of patches [32585572 32904851 32915586 32916816 32918050 ] have been applied on the local node. The release patch string is [19.12.0.0.0].

[grid@rac1 ~]$

[grid@rac1 ~]$ sqlplus / as sysasm

SQL*Plus: Release 19.0.0.0.0 - Production on Fri Oct 8 11:38:51 2021

Version 19.12.0.0.0

Copyright (c) 1982, 2021, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.12.0.0.0

SQL> exit

Disconnected from Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.12.0.0.0

[grid@rac1 ~]$

-----------------------------------------------------------------

All the patches listed in the patch folder of GRID 19.12 (32895426) are applied to the GRID HOME; this can be checked as the grid user:

[grid@rac1 ~]$

$ORACLE_HOME/OPatch/opatch lspatches

$ORACLE_HOME/OPatch/opatch lsinventory | grep description

$ORACLE_HOME/OPatch/opatch lsinventory | grep applied

pwd

$ORACLE_HOME/OPatch/opatch lsinventory | grep 32895426

$ORACLE_HOME/OPatch/opatch lsinventory | grep 32918050

$ORACLE_HOME/OPatch/opatch lsinventory | grep 32916816

$ORACLE_HOME/OPatch/opatch lsinventory | grep 32915586

$ORACLE_HOME/OPatch/opatch lsinventory | grep 32904851

$ORACLE_HOME/OPatch/opatch lsinventory | grep 32585572

pwd
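Instead of one grep per patch number, the inventory output can be read once and compared against the expected list. A sketch that works on text piped in from opatch; missing_patches is a hypothetical helper name and the only assumption is that each applied patch number appears as a whole word in the output.

```shell
# Read opatch inventory output on stdin and print every patch id
# from the argument list that does not appear in it.
missing_patches() {
    inv=$(cat)
    for id in "$@"; do
        printf '%s\n' "$inv" | grep -qw -- "$id" || echo "$id"
    done
}

# Example with the sub-patches of 32895426, as the grid user:
# "$ORACLE_HOME/OPatch/opatch" lspatches | \
#     missing_patches 32585572 32904851 32915586 32916816 32918050
```

Empty output means every listed patch number was found.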

#-- Repeat the same on node 2

1. Run analyze for the patch

2. Apply the patch with the correct $PATH

3. If applying the grid upgrade patch fails because of additional homes registered in the global inventory, take a backup and remove the unwanted home, e.g. 12.1.0.2 (EBS Home)

STEP 5: Database (RDBMS) upgrade from 12.1.0.2 to 19.3-->19.12

####################################################

#-- Unzip the 19.3 home as the oracle user

#-- Create the 19.3 home directory on node 2 as the oracle user

------------------------------------------------------

SQL> select count(*) from dba_objects where status='INVALID';

COUNT(*)

----------

0

SQL>

[oracle@rac1 ~]$ mkdir -p /u01/app/oracle/product/19.0.0/dbhome_1

[oracle@rac2 ~]$ mkdir -p /u01/app/oracle/product/19.0.0/dbhome_1

[oracle@rac1 ~]$ unzip /u01/sftwr/LINUX.X64_193000_db_home.zip -d /u01/app/oracle/product/19.0.0/dbhome_1/

[oracle@rac1 ~]$ cat dell.env

export ORACLE_HOME=/u01/app/oracle/product/12.1.0/dbhome_1

export PATH=$ORACLE_HOME/bin:$PATH

TNS_ADMIN=$ORACLE_HOME/network/admin

LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib/usr/lib; export LD_LIBRARY_PATH

export ORACLE_SID=DELL1

[oracle@rac1 ~]$ . dell.env

[oracle@rac1 ~]$

[oracle@rac1 ~]$ srvctl stop database -d dell

[oracle@rac1 ~]$ srvctl status database -d dell

Instance DELL1 is not running on node rac1

Instance DELL2 is not running on node rac2

[oracle@rac1 ~]$ cd /u01/app/oracle/product/19.0.0/dbhome_1

[oracle@rac1 dbhome_1]$ ./runInstaller (Approx. time 26Mins with 4GB RAM and 1 processor)

Select configuration options from the installer screens

1. On “Select Configuration Option” screen, select "Setup Software only", and then click Next.

2. On “Select Database Installation Option”, select "Oracle Real Application Clusters database installation" and click Next

3. On "Select List of Nodes" screen, verify all database servers in your cluster are present in the list and are selected, and then click Next.

4. On "Select Database Edition", select ‘Enterprise Edition’, then click Next.

5. On "Specify Installation Location" screen, set “Oracle Base”. The software location (ORACLE_HOME) cannot be changed: it is fixed to the directory from which you started runInstaller (here /u01/app/oracle/product/19.0.0/dbhome_1). Then click Next.

ORACLE BASE= /u01/app/oracle

6. On “Operating System Groups” screen, verify group names, and then click Next.

OS User (oracle) Groups= oinstall

7. On “Root script execution” screen, do not check the box. Keep root execution in your own control.

8. On "Prerequisite Checks" screen, verify there are no failed checks or warnings.

9. On "Summary" screen, verify information presented about installation, and then click Install.

10. On “Execute Configuration scripts” screen, execute root.sh on each database server as instructed, and then click OK.

11. On “Finish” screen, click Close.

[root@rac1 ~]# /u01/app/oracle/product/19.0.0/dbhome_1/root.sh

[root@rac2 ~]# /u01/app/oracle/product/19.0.0/dbhome_1/root.sh

#################################################################

APEX upgrade can be skipped; proceed to the next step:

The APEX upgrade session got disconnected after running for 45 minutes, which left some invalid objects.

-----------------------------------

SQL> SELECT VERSION, STATUS, SCHEMA FROM DBA_REGISTRY WHERE COMP_ID = 'APEX';

VERSION STATUS

------------------------------ --------------------------------------------

SCHEMA

--------------------------------------------------------------------------------

4.2.5.00.08 VALID

APEX_040200

SQL>

[oracle@rac1 dbhome_1]$ mv apex apex_old

[oracle@rac1 dbhome_1]$ unzip -q /u01/sftwr/apex_18.2_en.zip

[oracle@rac1 dbhome_1]$ cd apex

[oracle@rac1 apex]$ ls -ltr

[oracle@rac1 apex]$ sqlplus / as sysdba

SQL*Plus: Release 12.1.0.2.0 Production on Fri Oct 8 15:40:38 2021

Copyright (c) 1982, 2014, Oracle. All rights reserved.

Connected to:

Oracle Database 12c Enterprise Edition Release 12.1.0.2.0 - 64bit Production

With the Partitioning, Real Application Clusters, Automatic Storage Management, OLAP,

Advanced Analytics and Real Application Testing options

SQL> @apexins.sql SYSAUX SYSAUX TEMP /i/

#################################################################

STEP 6: After runInstaller finishes, first run the pre-upgrade tasks, then dbua (Approx. time 1hr 45Mins for dbua with 4GB RAM and 1 processor)

#################################################################

###############################################################################

#-- Run pre-upgrade script

[oracle@rac1 ~]$ mkdir -p /u01/app/oracle/preupgrade

[oracle@rac1 ~]$ ls -ltrh /u01/app/oracle/product/19.0.0/dbhome_1/rdbms/admin/preupgrade.jar

[oracle@rac1 ~]$

[oracle@rac1 ~]$ /u01/app/oracle/product/12.1.0/dbhome_1/jdk/bin/java -jar /u01/app/oracle/product/19.0.0/dbhome_1/rdbms/admin/preupgrade.jar FILE DIR /u01/app/oracle/preupgrade

[oracle@rac1 ~]$

[oracle@rac1 ~]$ cat /u01/app/oracle/preupgrade/preupgrade.log

SQL>

Connected to:

Oracle Database 12c Enterprise Edition Release 12.1.0.2.0 - 64bit Production

SQL> @/u01/app/oracle/preupgrade/preupgrade_fixups.sql

#-- Gather DICTIONARY STATS:

SQL>

SET ECHO ON;

SET SERVEROUTPUT ON;

EXECUTE DBMS_STATS.GATHER_DICTIONARY_STATS;

#-- Purge Recycle bin:

SQL> purge dba_recyclebin;

#-- Refresh MVs

SQL> SELECT o.name FROM sys.obj$ o, sys.user$ u, sys.sum$ s WHERE o.type# = 42 AND bitand(s.mflags, 8) =8;

no rows selected

SQL>

SQL>

declare

list_failures integer(3) :=0;

begin

DBMS_MVIEW.REFRESH_ALL_MVIEWS(list_failures,'C','', TRUE, FALSE);

end;

/

#-- Create Restore point:

SQL> create restore point pre_upgrade guarantee flashback database;

SQL>

SQL> select * from v$restore_point;

###############################################################################

[oracle@rac1 bin]$ pwd

/u01/app/oracle/product/19.0.0/dbhome_1/bin

[oracle@rac1 bin]$ echo $ORACLE_HOME

/u01/app/oracle/product/12.1.0/dbhome_1

[oracle@rac1 bin]$ ./dbua

12.1.0 SYSDBA user: sys/oracle

[oracle@rac1 ~]$ cat /etc/oratab | grep -i DELL

DELL:/u01/app/oracle/product/19.0.0/dbhome_1:N
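The oratab check above can be scripted so it is easy to repeat on each node after DBUA finishes. This is a minimal sketch; the helper name oratab_home is hypothetical and the file format assumed is the usual SID:ORACLE_HOME:Y|N layout.

```shell
# Print the Oracle home registered for a SID in an oratab-style file.
# Usage: oratab_home SID [FILE]   (FILE defaults to /etc/oratab)
# oratab_home is a hypothetical helper name, not an Oracle tool.
oratab_home() {
    # Skip comment lines and lines without a colon-separated home,
    # then print the second field of the first entry whose SID matches.
    awk -F: -v sid="$1" '
        /^[ \t]*#/ || NF < 2 { next }
        $1 == sid { print $2; exit }
    ' "${2:-/etc/oratab}"
}
```

For example, `oratab_home DELL` should print the 19c home once the upgrade has updated /etc/oratab.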

[oracle@rac1 ~]$ sqlplus / as sysdba

SQL*Plus: Release 19.0.0.0.0 - Production on Fri Oct 8 18:09:48 2021

Version 19.3.0.0.0

Copyright (c) 1982, 2019, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.3.0.0.0

SQL> select * from v$version;

BANNER

--------------------------------------------------------------------------------

BANNER_FULL

----------------------------------------------------------------------------------------------------------------------------------------------------------------

BANNER_LEGACY CON_ID

-------------------------------------------------------------------------------- ----------

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.3.0.0.0

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production 0

SQL>

SQL> select count(*) from dba_objects where status='INVALID';

COUNT(*)

----------

0

SQL> select name,open_mode,version from v$database,v$instance;

SQL>

set pagesize 400 linesize 200;

col COMP_ID for a15;

col COMP_NAME for a35;

col SCHEMA for a10;

col VERSION for a15;

col VERSION_FULL for a15;

select comp_id,comp_name,schema,version,version_full,status from dba_registry;

COMP_ID COMP_NAME SCHEMA VERSION VERSION_FULL STATUS

--------------- ----------------------------------- ---------- --------------- --------------- -----------

CATALOG Oracle Database Catalog Views SYS 19.0.0.0.0 19.18.0.0.0 VALID

CATPROC Oracle Database Packages and Types SYS 19.0.0.0.0 19.18.0.0.0 VALID

RAC Oracle Real Application Clusters SYS 19.0.0.0.0 19.18.0.0.0 VALID

JAVAVM JServer JAVA Virtual Machine SYS 19.0.0.0.0 19.18.0.0.0 VALID

XML Oracle XDK SYS 19.0.0.0.0 19.18.0.0.0 VALID

CATJAVA Oracle Database Java Packages SYS 19.0.0.0.0 19.18.0.0.0 VALID

APS OLAP Analytic Workspace SYS 19.0.0.0.0 19.18.0.0.0 VALID

XDB Oracle XML Database XDB 19.0.0.0.0 19.18.0.0.0 VALID

OWM Oracle Workspace Manager WMSYS 19.0.0.0.0 19.18.0.0.0 VALID

CONTEXT Oracle Text CTXSYS 19.0.0.0.0 19.18.0.0.0 VALID

ORDIM Oracle Multimedia ORDSYS 19.0.0.0.0 19.18.0.0.0 VALID

SDO Spatial MDSYS 19.0.0.0.0 19.18.0.0.0 VALID

XOQ Oracle OLAP API OLAPSYS 19.0.0.0.0 19.18.0.0.0 VALID

DV Oracle Database Vault DVSYS 19.0.0.0.0 19.18.0.0.0 VALID

OLS Oracle Label Security LBACSYS 19.0.0.0.0 19.18.0.0.0 VALID

15 rows selected.

SQL>

SQL> select description from dba_registry_sqlpatch;

DESCRIPTION

--------------------------------------------------------------------------------

Database Release Update : 19.3.0.0.190416 (29517242)

SQL>

#-- Run postupgrade_fixups.sql. DBUA already ran this script in its post-upgrade section; however, we executed it again:

SQL> @/u01/app/oracle/preupgrade/postupgrade_fixups.sql

#-- Good practice: change this parameter only about one month after the database upgrade, because raising COMPATIBLE cannot be reversed!

SQL> show parameter COMPATIBLE

SQL> ALTER SYSTEM SET COMPATIBLE = '19.0.0' SCOPE=SPFILE;

SQL> shut immediate;

SQL> startup;

[root@rac1 ~]# srvctl status database -d dell

Instance DELL1 is running on node rac1

Instance DELL2 is running on node rac2

[root@rac1 ~]#

[root@rac1 ~]# srvctl config all

Oracle Clusterware configuration details

========================================

Oracle Clusterware basic information

------------------------------------

Operating system Linux

Name dellc

Class STANDALONE

Cluster nodes rac1, rac2

Version 19.0.0.0.0

[root@rac1 ~]# srvctl config database -d dell

Database unique name: DELL

Database name: DELL

Oracle home: /u01/app/oracle/product/19.0.0/dbhome_1

Oracle user: oracle

Spfile: +DATA/DELL/PARAMETERFILE/spfileDELL.ora

Password file: +DATA/DELL/PASSWORD/pwddell.276.1083756719

Domain:

Start options: open

Stop options: immediate

Database role: PRIMARY

Management policy: AUTOMATIC

Server pools:

Disk Groups: DATA

Mount point paths:

Services:

Type: RAC

Start concurrency:

Stop concurrency:

OSDBA group: dba

OSOPER group: oinstall

Database instances: DELL1,DELL2

Configured nodes: rac1,rac2

CSS critical: no

CPU count: 0

Memory target: 0

Maximum memory: 0

Default network number for database services:

Database is administrator managed

[root@rac1 ~]#

STEP 7: Database (RDBMS) upgrade from 19.3 to 19.12

####################################################

---------------

======================================================================

#-- Upgrade OPatch in the 19.3 RDBMS home on both rac1 and rac2

[oracle@rac1 ~]$ cat dell.env

#export ORACLE_HOME=/u01/app/oracle/product/12.1.0/dbhome_1

export ORACLE_HOME=/u01/app/oracle/product/19.0.0/dbhome_1

export PATH=$ORACLE_HOME/bin:$PATH

TNS_ADMIN=$ORACLE_HOME/network/admin

LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib; export LD_LIBRARY_PATH

export ORACLE_SID=DELL1

[oracle@rac1 ~]$ . dell.env

[oracle@rac1 ~]$ echo $ORACLE_HOME

/u01/app/oracle/product/19.0.0/dbhome_1

[oracle@rac1 ~]$ $ORACLE_HOME/OPatch/opatch version

OPatch Version: 12.2.0.1.17

OPatch succeeded.

[oracle@rac1 ~]$

[oracle@rac1 ~]$ mv $ORACLE_HOME/OPatch $ORACLE_HOME/OPatch_bkp

[oracle@rac1 ~]$ unzip /u01/sftwr/p6880880_121010_Linux-x86-64.zip -d $ORACLE_HOME

[oracle@rac1 ~]$ $ORACLE_HOME/OPatch/opatch version

OPatch Version: 12.2.0.1.27

OPatch succeeded.

[oracle@rac1 ~]$

#-- Update OPatch for the 19.3 RDBMS home on rac2 as well
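Before patching, it can help to confirm that the upgraded OPatch meets the minimum version stated in the patch README on every node. The following is a minimal sketch, assuming GNU sort (for its -V option). The function names are hypothetical, and the minimum 12.2.0.1.25 used in the usage line is only an illustrative value; take the real minimum from the README of the patch you apply.

```shell
# Return success (0) when version $1 is greater than or equal to version $2.
# Assumes GNU sort, whose -V option compares dotted versions field by field.
version_ge() {
    # If the smaller of the two versions is $2, then $1 >= $2.
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Extract the version from `opatch version` output read on stdin,
# i.e. from the line "OPatch Version: 12.2.0.1.27".
opatch_version_from_output() {
    sed -n 's/^OPatch Version: *//p' | head -n 1
}
```

Usage on a node could look like `version_ge "$($ORACLE_HOME/OPatch/opatch version | opatch_version_from_output)" 12.2.0.1.25 || echo "OPatch too old"`.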

======================================================================

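opatchauto -analyze cannot be executed from the RDBMS home (it must run from the Grid home: $GRID_HOME/OPatch/opatchauto apply /u01/sftwr/32895426 -analyze), so run the conflict checks with opatch instead: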

$ORACLE_HOME/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /u01/sftwr/32895426/32904851

$ORACLE_HOME/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /u01/sftwr/32895426/32916816

======================================================================

========================================================

Run check conflicts for 19.12 RDBMS Upgrade Patch 32895426 on rac1 without setting $PATH

========================================================

[oracle@rac1 ~]$ $ORACLE_HOME/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /u01/sftwr/32895426/32904851

Oracle Interim Patch Installer version 12.2.0.1.27

Copyright (c) 2021, Oracle Corporation. All rights reserved.

PREREQ session

Oracle Home : /u01/app/oracle/product/19.0.0/dbhome_1

Central Inventory : /u01/app/oraInventory

from : /u01/app/oracle/product/19.0.0/dbhome_1/oraInst.loc

OPatch version : 12.2.0.1.27

OUI version : 12.2.0.7.0

Log file location : /u01/app/oracle/product/19.0.0/dbhome_1/cfgtoollogs/opatch/opatch2021-10-08_19-20-53PM_1.log

Invoking prereq "checkconflictagainstohwithdetail"

Prereq "checkConflictAgainstOHWithDetail" passed.

OPatch succeeded.

[oracle@rac1 ~]$

---- Check patch conflict with the opatch command

cd 32904851

opatch prereq CheckConflictAgainstOHWithDetail -ph ./

[oracle@rac1 ~]$ $ORACLE_HOME/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /u01/sftwr/32895426/32916816

========================================================

(Approx. 10 Sec)

Completed in 10 Sec: Run check conflicts for 19.12 RDBMS Upgrade Patch 32895426 on rac1 without setting $PATH

========================================================

========================================================

Run 19.12 RDBMS Upgrade Patch 32895426 on rac1 with root user before setting $PATH

Apply the OJVM patch that corresponds to the applied PSU, as described in the PSU patch README:

3.1.1 Applying Database 19.x Updates/Revisions with Oracle JavaVM 19.x Updates

Oracle Database 19c Proactive Patch Information (Doc ID 2521164.1)

========================================================

Before applying the 19.12 patch, make a tar backup of the Oracle Home.

opatchauto -analyze does not work for 19.12 RDBMS Upgrade Patch 32895426 when run from the RDBMS home:

[root@rac1 ~]# export ORACLE_HOME=/u01/app/oracle/product/19.0.0/dbhome_1

[root@rac1 ~]# echo $ORACLE_HOME

/u01/app/oracle/product/19.0.0/dbhome_1

[root@rac1 ~]#

[root@rac1 ~]# echo $PATH

/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/root/bin

[root@rac1 ~]#

[root@rac1 ~]# $ORACLE_HOME/OPatch/opatchauto apply /u01/sftwr/32895426 -analyze

opatchauto must run from Grid Home with current arguments. Please retry it inside Grid Home

opatchauto returns with error code = 2

[root@rac1 ~]#

Apply 19.12 RDBMS Upgrade Patch 32895426 on rac1 with $PATH set

[root@rac1 ~]# srvctl status database -d $ORACLE_UNQNAME

Instance DELL1 is running on node rac1

Instance DELL2 is running on node rac2

[root@rac1 ~]# export ORACLE_HOME=/u01/app/oracle/product/19.0.0/dbhome_1

[root@rac1 ~]# export PATH=$PATH:$ORACLE_HOME/OPatch

[root@rac1 ~]# echo $PATH

/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/root/bin:/u01/app/oracle/product/19.0.0.0/dbhome_1/OPatch

[root@rac1 ~]#

[root@rac1 ~]# $ORACLE_HOME/OPatch/opatchauto apply /u01/sftwr/32895426 -oh /u01/app/oracle/product/19.0.0/dbhome_1

OPatchauto session is initiated at Thu Dec 23 14:53:42 2021

System initialization log file is /u01/app/oracle/product/19.0.0/dbhome_1/cfgtoollogs/opatchautodb/systemconfig2021-12-23_02-53-48PM.log.

Session log file is /u01/app/oracle/product/19.0.0/dbhome_1/cfgtoollogs/opatchauto/opatchauto2021-12-23_02-54-30PM.log

The id for this session is REXC

Executing OPatch prereq operations to verify patch applicability on home /u01/app/oracle/product/19.0.0/dbhome_1

Patch applicability verified successfully on home /u01/app/oracle/product/19.0.0/dbhome_1

Executing patch validation checks on home /u01/app/oracle/product/19.0.0/dbhome_1

Patch validation checks successfully completed on home /u01/app/oracle/product/19.0.0/dbhome_1

Verifying SQL patch applicability on home /u01/app/oracle/product/19.0.0/dbhome_1

SQL patch applicability verified successfully on home /u01/app/oracle/product/19.0.0/dbhome_1

Preparing to bring down database service on home /u01/app/oracle/product/19.0.0/dbhome_1

Successfully prepared home /u01/app/oracle/product/19.0.0/dbhome_1 to bring down database service

Bringing down database service on home /u01/app/oracle/product/19.0.0/dbhome_1

Following database(s) and/or service(s) are stopped and will be restarted later during the session: dell

Database service successfully brought down on home /u01/app/oracle/product/19.0.0/dbhome_1

Performing prepatch operation on home /u01/app/oracle/product/19.0.0/dbhome_1

Perpatch operation completed successfully on home /u01/app/oracle/product/19.0.0/dbhome_1

Start applying binary patch on home /u01/app/oracle/product/19.0.0/dbhome_1

Binary patch applied successfully on home /u01/app/oracle/product/19.0.0/dbhome_1

Performing postpatch operation on home /u01/app/oracle/product/19.0.0/dbhome_1

Postpatch operation completed successfully on home /u01/app/oracle/product/19.0.0/dbhome_1

Starting database service on home /u01/app/oracle/product/19.0.0/dbhome_1

Database service successfully started on home /u01/app/oracle/product/19.0.0/dbhome_1

Preparing home /u01/app/oracle/product/19.0.0/dbhome_1 after database service restarted

No step execution required.........

Trying to apply SQL patch on home /u01/app/oracle/product/19.0.0/dbhome_1

SQL patch applied successfully on home /u01/app/oracle/product/19.0.0/dbhome_1

OPatchAuto successful.

--------------------------------Summary--------------------------------

Patching is completed successfully. Please find the summary as follows:

Host:rac1

RAC Home:/u01/app/oracle/product/19.0.0/dbhome_1

Version:19.0.0.0.0

Summary:

==Following patches were SKIPPED:

Patch: /u01/sftwr/32895426/32915586

Reason: This patch is not applicable to this specified target type - "rac_database"

Patch: /u01/sftwr/32895426/32585572

Reason: This patch is not applicable to this specified target type - "rac_database"

Patch: /u01/sftwr/32895426/32918050

Reason: This patch is not applicable to this specified target type - "rac_database"

==Following patches were SUCCESSFULLY applied:

Patch: /u01/sftwr/32895426/32904851

Log: /u01/app/oracle/product/19.0.0/dbhome_1/cfgtoollogs/opatchauto/core/opatch/opatch2021-12-23_14-57-21PM_1.log

Patch: /u01/sftwr/32895426/32916816

Log: /u01/app/oracle/product/19.0.0/dbhome_1/cfgtoollogs/opatchauto/core/opatch/opatch2021-12-23_14-57-21PM_1.log

OPatchauto session completed at Thu Dec 23 15:06:56 2021

Time taken to complete the session 13 minutes, 14 seconds

[root@rac1 ~]#

========================================================

(Approx. 14 Mins)

Completed in 14 Mins: RDBMS Upgrade Patch 32895426 on rac1 with root user before setting $PATH

========================================================

[root@rac1 ~]# su - oracle

Last login: Fri Oct 8 19:09:10 +03 2021 on pts/0

[oracle@rac1 ~]$ . dell.env

[oracle@rac1 ~]$

$ORACLE_HOME/OPatch/opatch lsinventory | grep 32904851

$ORACLE_HOME/OPatch/opatch lsinventory | grep 32916816

$ORACLE_HOME/OPatch/opatch lsinventory | grep applied

pwd

[oracle@rac1 ~]$ $ORACLE_HOME/OPatch/opatch lsinventory |grep description

Patch description: "Database Release Update : 19.12.0.0.210720 (32904851)"

Patch description: "OCW Release Update : 19.12.0.0.210720 (32916816)"

[oracle@rac1 ~]$
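The lsinventory greps above can be folded into one check that fails loudly when an expected patch is missing, which is handy when repeating the verification on node 2. This is a minimal sketch; patches_present is a hypothetical helper that only parses text on stdin and does not call opatch itself.

```shell
# Read `opatch lsinventory` output on stdin and verify that every patch ID
# passed as an argument appears in a "Patch description:" line.
# patches_present is a hypothetical helper name.
patches_present() {
    inv=$(cat)                      # keep the inventory text for repeated scans
    for id in "$@"; do
        # Patch IDs are printed in parentheses at the end of the description.
        printf '%s\n' "$inv" | grep 'Patch description:' | grep -qF "(${id})" || {
            echo "missing patch ${id}" >&2
            return 1
        }
    done
}
```

For the 19.12 patch in this walkthrough the call would be `$ORACLE_HOME/OPatch/opatch lsinventory | patches_present 32904851 32916816`.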

[oracle@rac1 ~]$ sqlplus / as sysdba

SQL*Plus: Release 19.0.0.0.0 - Production on Fri Oct 8 19:12:53 2021

Version 19.12.0.0.0

Copyright (c) 1982, 2021, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.12.0.0.0

SQL> select * from v$version;

BANNER

--------------------------------------------------------------------------------

BANNER_FULL

--------------------------------------------------------------------------------

BANNER_LEGACY

--------------------------------------------------------------------------------

CON_ID

----------

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.12.0.0.0

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

0

BANNER

--------------------------------------------------------------------------------

BANNER_FULL

--------------------------------------------------------------------------------

BANNER_LEGACY

--------------------------------------------------------------------------------

CON_ID

----------

SQL>

SQL> select description from dba_registry_sqlpatch;

no rows selected

SQL>

#-- Repeat same steps for node 2

1. Verify that the oui-patch.xml file has the same permissions on both nodes. When 19.18 was applied with opatch individually on each node, we faced the issue reported in the known issues below. The file should have 664 permission on both nodes.

ls -ltrh /u01/app/oraInventory/ContentsXML/oui-patch.xml

2. Then run datapatch as described in README_32904851.html in the database patch directory.

As per README_32895426.html

1.2.7.2 Loading Modified SQL Files into the Database

The following steps load modified SQL files into the database. For an Oracle RAC environment, perform these steps on only one node.

Datapatch is run to complete the post-install SQL deployment for the PSU

Steps Standalone DB

% sqlplus /nolog

SQL> Connect / as sysdba

SQL> startup

SQL> quit

cd $ORACLE_HOME/OPatch

./datapatch -verbose

SQL> select description from dba_registry_sqlpatch;

no rows selected

SQL>

SQL> select count(*) from all_objects where status='INVALID';

SQL> @?/rdbms/admin/utlrp.sql

Finally, stop and start GRID and the database with the upgraded version; you should not get any permission issue:

grid@rac1 :~$ crsctl stop crs

grid@rac1 :~$ crsctl start crs

grid@rac1 :~$ crsctl check crs

grid@rac1 :~$ crsctl check cluster -all

grid@rac1 :~$

grid@rac1 :~$ srvctl status database -d DELL

Best practice: Keep the backup of the old binaries for one month.

STEP 8: Apply corresponding OJVM for the applied PSU

Release Schedule of Current Database Releases (Doc ID 742060.1)

Primary Note for Database Proactive Patch Program (Doc ID 888.1)

Database 11.2.0.4 Proactive Patch Information (Doc ID 2285559.1)

Database 12.1.0.2 Proactive Patch Information (Doc ID 2285558.1)

Database 18 Proactive Patch Information (Doc ID 2369376.1)

Oracle Database 19c Proactive Patch Information (Doc ID 2521164.1)

Oracle Database 21c Proactive Patch Information (Doc ID 2796590.1)

Oracle Recommended Patches -- "Oracle JavaVM Component Database PSU and Update" (OJVM PSU and OJVM Update) Patches (Doc ID 1929745.1)

SELECT version, status FROM dba_registry WHERE comp_id='JAVAVM';

As an example, to apply the 19.18 OJVM patch, go to the patch folder and use napply without unzipping the OJVM patch

Patching fails during relink , with error code 102 :: Fatal error: Command failed for target `javavm_refresh' (Doc ID 2002334.1)

[oracle@rac1 ~]$ cd /u02/19cSW/OJVM_19_ALL/OJVM_19_18

[oracle@rac1 OJVM_19_18]$ ls -ltrh

-rw-r--r--. 1 oracle oinstall 121M Mar 1 11:08 p34786990_190000_Linux-x86-64.zip

[oracle@rac1 OJVM_19_18]$ which perl

/usr/bin/perl

[oracle@rac1 OJVM_19_18]$ perl -v

This is perl 5, version 26, subversion 3 (v5.26.3) built for x86_64-linux-thread-multi

[oracle@rac1 OJVM_19_18]$ export PATH=$ORACLE_HOME/perl/bin:$PATH

[oracle@rac1 OJVM_19_18]$ which perl

/u01/app/oracle/product/19.18.0/dbhome_1/perl/bin/perl

[oracle@rac1 OJVM_19_18]$ perl -v

This is perl 5, version 36, subversion 0 (v5.36.0) built for x86_64-linux-thread-multi

[oracle@rac1 OJVM_19_18]$ $ORACLE_HOME/OPatch/opatch napply -id 34786990

[oracle@rac1 ~]$

[oracle@rac1 ~]$ $ORACLE_HOME/OPatch/opatch lsinventory |grep description

ARU platform description:: Linux x86-64

Patch description: "OJVM RELEASE UPDATE: 19.18.0.0.230117 (34786990)"

Patch description: "OCW RELEASE UPDATE 19.18.0.0.0 (34768559)"

Patch description: "DATABASE RELEASE UPDATE : 19.18.0.0.230117 (REL-JAN230131) (34765931)"

[oracle@rac1 ~]$

Run datapatch after applying OJVM to load the latest SQL into the database:

startup

[oracle@rac1 ~]$ time $ORACLE_HOME/OPatch/datapatch -verbose

set linesize 220 pagesize 200;

col ACTION_TIME for a35;

select PATCH_ID,ACTION,STATUS,ACTION_TIME,DESCRIPTION from dba_registry_sqlpatch;

################################################################################################################

################################################################################################################

COLD BACKUP Method of upgrade

When upgrading a database with EXPDP, for example from 11.2.0.4 to 19c while moving from Linux 6 to Linux 7, follow the Oracle documentation.

################################################################################################################

- Run a fresh gather stats on the database (3 queries) before starting EXPDP

- stop the listener to close any connected sessions

- stop and start the database (export time dropped from 9hr to 2hr)

- compare init parameters of the old and upgraded databases and modify accordingly

- Export backup with the following commands

SQL> alter system switch logfile;

srvctl stop database -d $ORACLE_UNQNAME (to enhance performance & free-up SGA for expdp)

[oracle@rac1 ~]$ ps -ef | grep '[L]OCAL=NO' | awk '{print $2}' | xargs -r kill -9

[oracle/grid@rac1/rac2 ~]$ lsnrctl stop

[oracle@rac1 ~]$ ps -ef |grep -i LOCAL=NO|wc -l

1

[oracle@rac1 ~]$
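Note that a plain `grep LOCAL=NO` also matches the grep process itself, which is why the count above shows 1 even when no remote sessions remain. A minimal sketch of a filter that lists only the real server processes is shown below; remote_session_pids is a hypothetical helper that reads `ps -ef` output on stdin, so it can be reviewed before anything is killed.

```shell
# Read `ps -ef` output on stdin and print the PIDs (column 2) of Oracle
# server processes started through the listener (command shows LOCAL=NO).
# Lines belonging to grep or awk themselves are ignored.
remote_session_pids() {
    awk '/LOCAL=NO/ && !/grep/ && !/awk/ { print $2 }'
}
```

Typical use is `ps -ef | remote_session_pids` to review the list first; an empty result means no listener-spawned sessions are left.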

sync && echo 3 > /proc/sys/vm/drop_caches && swapoff -a && swapon -a && printf '\n%s\n' 'RAM cache and swap cleared'

-- Start the database in restricted mode so that no other user is able to connect:

SQL> startup restrict;

expdp "'/ as sysdba'" DIRECTORY=DATAPUMP DUMPFILE=metricereg2803.dmp LOGFILE=metricereg2803.log FULL=Y METRICS=Y EXCLUDE=STATISTICS compression=all logtime=all

- unzip 19.3 and then execute runInstaller

- apply required PSU ex:19.18 and OJVM Patches

$ORACLE_HOME/OPatch/opatch lsinventory | grep description

- create an empty database with the required NAME and CHARACTERSET on the RAC nodes and keep a flashback restore point to be on the safe side

- Create the required tablespaces (note down the tablespaces that impdp is unable to create, then create them manually and retry impdp)

- import the database with the command below

- Run all basic queries to validate the database

select to_char(sysdate,'DD-MM-YYYY HH24:MI:SS') from dual;

show parameter sec_case_sensitive_logon

SELECT * from v$nls_parameters where parameter like '%CHARACTERSET%';

SELECT * FROM dba_directories where DIRECTORY_NAME='EXPDP';

create or replace directory expdp as '/u01/app/oracle/expdp';

SQL> select TABLESPACE_NAME from dba_tablespaces;

SQL> SELECT * FROM dba_temp_free_space;

SQL> select name from v$restore_point;

SQL> select comp_id,comp_name,schema,version,status from dba_registry;

SQL> select PATCH_ID,ACTION,STATUS,ACTION_TIME,DESCRIPTION from dba_registry_sqlpatch;

impdp system/sys DIRECTORY=expdp DUMPFILE=metricereg2803.dmp LOGFILE=metricereg2203_exp.log FULL=Y METRICS=Y logtime=all

- after the import completes, check invalid objects and run @?/rdbms/admin/utlrp.sql once

- execute gather stats on the database

- if it reports any APEX schema or Enterprise Manager Cloud Control schema (SYSMAN), delete it and re-install if required.

- Update the time zone of the database as well.

#############################

Uninstall APEX from upgraded 19C Database which came from source

How to Uninstall Oracle HTML DB / Application Express from a Standard Database (Doc ID 558340.1)

- After impdp there are a lot of invalid objects related to APEX, and the APEX schema is locked; the invalid objects remain even after utlrp has run. So we decided to uninstall and remove APEX from the upgraded 19c database

select count(*) from all_objects where status like 'INVALID' and owner like 'APEX%'; --120

select count(*) from all_objects where status like 'INVALID' and owner like 'PUBLIC'; --4

select username,account_status from dba_users where username like '%APEX%';

select username,account_status from dba_users where username like 'FLOWS%';

USERNAME

--------------------------------------------------------------------------------

APEX_PUBLIC_USER

APEX_030200

FLOWS_FILES

SQL> drop user FLOWS_FILES cascade;

User dropped.

SQL> drop user APEX_PUBLIC_USER;

User dropped.

SQL> drop user APEX_030200 cascade;

User dropped.

SQL>

-------drop all synonyms given in below query (in CTS and EREG 199)

select 'drop public synonym ' || synonym_name || ';' from sys.dba_synonyms where table_owner in ('APEX_030200','FLOWS_FILES');

SQL> select 'drop public synonym ' || synonym_name || ';' from sys.dba_synonyms where table_owner in ('APEX_030200','FLOWS_FILES');

no rows selected

SQL>

SQL> select object_type||' '||object_name from dba_objects where owner='SYS' and object_name like 'WWV%';

no rows selected

SQL>

#############################

Uninstall OEM SYSMAN Schema which was configured in source database after impdp

How To Drop, Create And Recreate Database Control (dbconsole) Web Site in Releases 10g and 11g (Doc ID 278100.1)

EMCA fails with "SEVERE: Error starting Database Control" (Doc ID 757897.1)

12c Cloud Control Repository: How to Manually Drop Repository Database Objects in 12c Cloud Control (Doc ID 1395423.1)

- As the source database was monitored by Enterprise Manager, objects were deployed in the database under the SYSMAN schema

If OEM is not in use, you can drop the SYSMAN objects; once they are dropped it is a normal database again. If you need the SYSMAN objects later, you must either restore them from a previous backup or do a fresh re-install of OEM.

select sum(bytes)/1024/1024/1024 as size_in_giga, segment_type from dba_segments where owner='SYSMAN' group by segment_type;

DROP USER sysman CASCADE;

DROP ROLE mgmt_user;

DROP USER mgmt_view CASCADE;

DROP PUBLIC SYNONYM mgmt_target_blackouts;

DROP PUBLIC SYNONYM Setemviewusercontext;

select count(*) from tab;

select count(*) from tab;

-- DROP all reported sysman synonyms as per above (doc 1395423.1)

select 'drop '|| decode(owner,'PUBLIC',owner||' synonym '||synonym_name, ' synonym '||owner||'.'||synonym_name) ||';' from dba_synonyms where table_owner in ('SYSMAN', 'SYSMAN_MDS', 'MGMT_VIEW', 'SYSMAN_BIPLATFORM', 'SYSMAN_APM', 'SYSMAN_OPSS', 'SYSMAN_RO') ;

select owner,synonym_name from dba_synonyms where table_owner in ('SYSMAN','SYSMAN_MDS','MGMT_VIEW','SYSMAN_BIP','SYSMAN_APM','SYSMAN_OPSS','SYSMAN_RO');--Query should not return any rows.

select count(*) from all_objects where status like 'INVALID';---524 before utlrp

@?/rdbms/admin/utlrp.sql

select count(*) from all_objects where status like 'INVALID';--201 after utlrp

#############################

Upgrade Timezone of database after impdp

Multitenant - CDB/PDB - Upgrading DST using scripts - 12.2 and above - ( With Example Test Case - 19.11 ) (Doc ID 2794739.1)

select INST_ID,value from gv$diag_info where name='Diag Trace';

@?/rdbms/admin/utlusts.sql

Enter value for 1:

Oracle Database Release 19 Post-Upgrade Status Tool 03-30-2023 10:47:1

Database Name: XXXXX

Component Current Full Elapsed Time

Name Status Version HH:MM:SS

No status to report as upgrade was not performed.

Database time zone version is 32. It is older than current release time

zone version 40. Time zone upgrade is needed using the DBMS_DST package.

SQL> SELECT version FROM v$timezone_file;

VERSION

----------

32

SQL> select count(*) from SYS.WRI$_OPTSTAT_HISTGRM_HISTORY;

COUNT(*)

----------

159237

SQL> select count(*) from SYS.WRI$_OPTSTAT_HISTHEAD_HISTORY;

COUNT(*)

----------

247758

SQL>

SQL> alter system set cluster_database=false scope=spfile;

shut immediate (all rac nodes)

startup

- Prepare Window

cd $ORACLE_HOME/rdbms/admin

SQL>

SQL> @?/rdbms/admin/utltz_countstats.sql

SQL> @?/rdbms/admin/utltz_countstar.sql

exec dbms_scheduler.purge_log;

select count(*) from SYS.WRI$_OPTSTAT_HISTGRM_HISTORY; --159237

select count(*) from SYS.WRI$_OPTSTAT_HISTHEAD_HISTORY; ---247758

-- check the data retention period of the stats

-- the default value is 31

select systimestamp - dbms_stats.get_stats_history_availability from dual;

-- disable stats retention

exec dbms_stats.alter_stats_history_retention(0);

-- remove all the stats

exec DBMS_STATS.PURGE_STATS(systimestamp);

-- check the result of the purge operation

select count(*) from SYS.WRI$_OPTSTAT_HISTGRM_HISTORY; -- 0

select count(*) from SYS.WRI$_OPTSTAT_HISTHEAD_HISTORY; -- 0

exec dbms_stats.alter_stats_history_retention(31);

- Upgrade Window

spool utltz_upg_check.log

@utltz_upg_check.sql

spool off

---- Before executing the apply script, set cluster_database to false (otherwise the script will not execute)

spool utltz_upg_apply.log

@utltz_upg_apply.sql

spool off

SQL> SELECT version FROM v$timezone_file;

VERSION

----------

40

SQL> @?/rdbms/admin/utlusts.sql

Enter value for 1:

Oracle Database Release 19 Post-Upgrade Status Tool 03-28-2023 19:04:3

Database Name: NEREG

Component Current Full Elapsed Time

Name Status Version HH:MM:SS

No status to report as upgrade was not performed.

Database time zone version is 40. It meets current release needs.

SQL>

- compile invalid objects

select count(*) from all_objects where status like 'INVALID';---524 before utlrp

@?/rdbms/admin/utlrp.sql

select count(*) from all_objects where status like 'INVALID';--201 after utlrp

-- Restart database

SQL> alter system set cluster_database=true scope=spfile;

shut immediate (all rac nodes)

srvctl start database -d $ORACLE_UNQNAME

-- If required run gather stats before hand over

-- drop reported expdp jobs in database

SELECT 'DROP TABLE '||o.OWNER||'.'||o.OBJECT_NAME||' PURGE;'

FROM dba_objects o, dba_datapump_jobs j

WHERE o.owner=j.owner_name

AND o.object_name=j.job_name

AND j.state='NOT RUNNING';

#############################

disable case sensitive login of schemas if required (SOURCE)

SQL> col username for a20;

SQL> select username, password_versions from DBA_USERS where username='AREEF';

USERNAME PASSWORD_VERSIONS

-------------------- -----------------

AREEF 10G 11G

SQL>

SQL> conn areef/Areef

ERROR:

ORA-01017: invalid username/password; logon denied

Warning: You are no longer connected to ORACLE.

SQL> conn / as sysdba

Connected.

SQL> show parameter sec_case_sensitive_logon

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

sec_case_sensitive_logon boolean TRUE

SQL>

SQL>

SQL> alter system set sec_case_sensitive_logon=false;

System altered.

SQL>

SQL> show parameter sec_case_sensitive_logon

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

sec_case_sensitive_logon boolean FALSE

SQL> conn areef/Areef

Connected.

SQL> exit

[oracle@rac1 ~]$ cat $TNS_ADMIN/sqlnet.ora

NAMES.DIRECTORY_PATH=(TNSNAMES, ONAMES, HOSTNAME, EZCONNECT)

SQLNET.ALLOWED_LOGON_VERSION_SERVER=11

[oracle@rac1 ~]$

################################################################################################################

Known issues

Reported Issue #1. Applying the 19.18 RDBMS PSU on the database home

The RDBMS opatch apply completed successfully on node 1. When the same procedure was run on node 2 with opatchauto apply, it failed with the error below.

Solution: change the permission of /u01/app/oraInventory/ContentsXML/oui-patch.xml to 664, then roll back and reapply the patch; the retry was successful.

[oracle@rac2 34765931]$ $ORACLE_HOME/OPatch/opatch apply

Patching component oracle.jdk, 1.8.0.201.0...

ApplySession failed in system modification phase... 'ApplySession::apply failed: java.io.IOException: oracle.sysman.oui.patch.PatchException: java.io.FileNotFoundException: /u01/app/oraInventory/ContentsXML/oui-patch.xml (Permission denied)'

Restoring "/u01/app/oracle/product/19.0.0.0/db_1" to the state prior to running NApply...

#### Stack trace of processes holding locks ####

Time: 2023-03-05_12-33-03PM

Command: oracle/opatch/OPatch apply -invPtrLoc /u01/app/oracle/product/19.0.0.0/db_1/oraInst.loc

Lock File Name: /u01/app/oraInventory/locks/_u01_app_oracle_product_19.0.0.0_db_1_writer.lock

StackTrace:

-----------

java.lang.Throwable

at oracle.sysman.oii.oiit.OiitLockHeartbeat.writeStackTrace(OiitLockHeartbeat.java:193)

at oracle.sysman.oii.oiit.OiitLockHeartbeat.<init>(OiitLockHeartbeat.java:173)

at oracle.sysman.oii.oiit.OiitTargetLocker.getWriterLock(OiitTargetLocker.java:346)

at oracle.sysman.oii.oiit.OiitTargetLocker.getWriterLock(OiitTargetLocker.java:238)

at oracle.sysman.oii.oiic.OiicStandardInventorySession.acquireLocks(OiicStandardInventorySession.java:564)

at oracle.sysman.oii.oiic.OiicStandardInventorySession.initAreaControl(OiicStandardInventorySession.java:359)

at oracle.sysman.oii.oiic.OiicStandardInventorySession.initSession(OiicStandardInventorySession.java:332)

at oracle.sysman.oii.oiic.OiicStandardInventorySession.initSession(OiicStandardInventorySession.java:294)

at oracle.sysman.oii.oiic.OiicStandardInventorySession.initSession(OiicStandardInventorySession.java:243)

at oracle.sysman.oui.patch.impl.HomeOperationsImpl.initialize(HomeOperationsImpl.java:107)

at oracle.glcm.opatch.common.api.install.HomeOperationsShell.initialize(HomeOperationsShell.java:117)

at oracle.opatch.ipm.IPMRWServices.addPatchCUP(IPMRWServices.java:134)

at oracle.opatch.ipm.IPMRWServices.add(IPMRWServices.java:146)

at oracle.opatch.ApplySession.apply(ApplySession.java:898)

at oracle.opatch.ApplySession.processLocal(ApplySession.java:4109)

at oracle.opatch.ApplySession.process(ApplySession.java:4979)

at oracle.opatch.ApplySession.process(ApplySession.java:4841)

at oracle.opatch.OPatchACL.processApply(OPatchACL.java:310)

at oracle.opatch.opatchutil.NApply.legacy_process(NApply.java:1415)

at oracle.opatch.opatchutil.NApply.legacy_process(NApply.java:373)

at oracle.opatch.opatchutil.NApply.process(NApply.java:353)

at oracle.opatch.opatchutil.OUSession.napply(OUSession.java:1137)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at oracle.opatch.UtilSession.process(UtilSession.java:355)

at oracle.opatch.OPatchSession.process(OPatchSession.java:2643)

at oracle.opatch.OPatch.process(OPatch.java:873)

at oracle.opatch.OPatch.main(OPatch.java:930)

------------------------------------

OPatch failed to restore OH '/u01/app/oracle/product/19.0.0.0/db_1'. Consult OPatch document to restore the home manually before proceeding.

NApply was not able to restore the home. Please invoke the following scripts:

- restore.[sh,bat]

- make.txt (Unix only)

to restore the ORACLE_HOME. They are located under

"/u01/app/oracle/product/19.0.0.0/db_1/.patch_storage/NApply/2023-03-05_12-33-03PM"

UtilSession failed: ApplySession failed in system modification phase... 'ApplySession::apply failed: java.io.IOException: oracle.sysman.oui.patch.PatchException: java.io.FileNotFoundException: /u01/app/oraInventory/ContentsXML/oui-patch.xml (Permission denied)'

Log file location: /u01/app/oracle/product/19.0.0.0/db_1/cfgtoollogs/opatch/opatch2023-03-05_12-33-03PM_1.log

OPatch failed with error code 73
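The file that OPatch could not write is buried in a long exception line. A minimal sketch for pulling it out of the output or log is shown here; the function name denied_paths is hypothetical and it only assumes the "(Permission denied)" wording seen in the error above.

```shell
# Hypothetical helper: read OPatch output or a log on stdin and print each
# distinct path that is reported with "(Permission denied)".
denied_paths() {
    grep -o '[^ ]* (Permission denied)' |
        sed 's/ (Permission denied)$//' |
        sort -u
}

# Example (log path taken from the output above):
#   denied_paths < /u01/app/oracle/product/19.0.0.0/db_1/cfgtoollogs/opatch/opatch2023-03-05_12-33-03PM_1.log
```

Each path it prints is a candidate for the ownership and permission check that follows.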

[root@racdb1 ~]# ls -ltrh /u01/app/oraInventory/ContentsXML/oui-patch.xml

-rw-rw-r--. 1 grid oinstall 174 Mar 5 12:31 /u01/app/oraInventory/ContentsXML/oui-patch.xml

[root@racdb1 ~]#

[oracle@racdb2 bin]$ ls -ltrh /u01/app/oraInventory/ContentsXML/oui-patch.xml

-rw-r--r-- 1 grid oinstall 174 Mar 5 11:29 /u01/app/oraInventory/ContentsXML/oui-patch.xml

[root@racdb2 ~]# chmod 664 /u01/app/oraInventory/ContentsXML/oui-patch.xml

[root@racdb2 ~]# ls -ltrh /u01/app/oraInventory/ContentsXML/oui-patch.xml

-rw-rw-r-- 1 grid oinstall 174 Mar 5 11:29 /u01/app/oraInventory/ContentsXML/oui-patch.xml

--------------------------------- This problem occurs while applying the RDBMS PSU on node 2

[root@rac2 ~]# ls -ltrh /u01/app/oraInventory/ContentsXML/oui-patch.xml

-rw-r--r--. 1 grid oinstall 174 Mar 21 10:27 /u01/app/oraInventory/ContentsXML/oui-patch.xml

[root@rac2 ~]# chmod 664 /u01/app/oraInventory/ContentsXML/oui-patch.xml

[root@rac2 ~]# ls -ltrh /u01/app/oraInventory/ContentsXML/oui-patch.xml

-rw-rw-r--. 1 grid oinstall 174 Mar 21 10:27 /u01/app/oraInventory/ContentsXML/oui-patch.xml

[root@rac2 ~]#
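The permission check can be done up front on every node before patching. The sketch below is not part of OPatch: the function name ensure_mode_664 is hypothetical, and it assumes GNU stat (as shipped with Oracle Linux) and that it is run as root or as the file owner.

```shell
# Hypothetical helper: make sure a file has mode 664 so that members of the
# owning group (oinstall in the listing above) can write to it.
ensure_mode_664() {
    file="$1"
    [ -f "$file" ] || { echo "missing: $file" >&2; return 1; }
    mode=$(stat -c '%a' "$file")          # GNU stat: octal permission bits
    if [ "$mode" != "664" ]; then
        chmod 664 "$file" || return 1
        echo "changed $file from $mode to 664"
    else
        echo "ok $file already 664"
    fi
}

# Example, run on each node before opatch apply:
#   ensure_mode_664 /u01/app/oraInventory/ContentsXML/oui-patch.xml
```

The file is owned by grid:oinstall, so with mode 644 the oracle user can read it but not update it, which matches the failure seen on node 2.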

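If $ORACLE_HOME/inventory/oneoffs/34765931 is corrupted on node 2, copy it over from node 1 (create the archive on rac1, transfer it, and extract it on rac2):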

[oracle@rac1 oneoffs]$ tar -czf 34765931.tar.gz 34765931

[oracle@rac2 oneoffs]$ tar -xzf 34765931.tar.gz

[oracle@rac2 34765931]$ $ORACLE_HOME/OPatch/opatch lsinventory | grep description

ARU platform description:: Linux x86-64

Patch description: "DATABASE RELEASE UPDATE : 19.18.0.0.230117 (REL-JAN230131) (34765931)"

Patch description: "OCW RELEASE UPDATE 19.3.0.0.0 (29585399)"

[oracle@rac2 34765931]$ $ORACLE_HOME/OPatch/opatch rollback -id 34765931

[oracle@rac2 34765931]$ $ORACLE_HOME/OPatch/opatch lsinventory | grep description

ARU platform description:: Linux x86-64

Patch description: "OCW RELEASE UPDATE 19.3.0.0.0 (29585399)"

Patch description: "Database Release Update : 19.3.0.0.190416 (29517242)"

[oracle@rac2 34765931]$

[oracle@rac2 34765931]$ $ORACLE_HOME/OPatch/opatch apply

--------------------------------- With the above steps, the patch applied successfully on the next attempt.
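To confirm the result of the rollback and the reapply without reading the listing by eye, the lsinventory output can be checked for a patch ID. A small sketch follows; has_patch is a hypothetical name and it relies only on the "Patch description: ... (ID)" line format shown above.

```shell
# Hypothetical helper: read `opatch lsinventory` output on stdin and succeed
# only if a "Patch description" line ends with the given patch ID in parentheses.
has_patch() {
    grep -Eq "Patch description:.*\($1\)"
}

# Example:
#   $ORACLE_HOME/OPatch/opatch lsinventory | has_patch 34765931 && echo "34765931 is applied"
```

After the rollback the check for 34765931 should fail, and after the second opatch apply it should succeed.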

Reported Issue #2. If the alert log shows the errors below, create a new password file in ASM

Errors in file /u01/app/oracle/diag/rdbms/nereg/NEREG1/trace/NEREG1_ora_6244.trc:

ORA-17503: ksfdopn:2 Failed to open file +DATA/NEREG/PASSWORD/pwdnereg.316.1132231531

ORA-27300: OS system dependent operation:open failed with status: 13

ORA-27301: OS failure message: Permission denied

ORA-27302: failure occurred at: sskgmsmr_7

ORA-01017: invalid username/password; logon denied

2023-03-26T03:12:54.553193+03:00

Solution: (update password file)

srvctl config database -d $ORACLE_UNQNAME

orapwd file=+DATA/NEREG/PASSWORD/orapwNEREG password=59awestridge_1 dbuniquename='NEREG' entries=30 force=y

Reported Issue #3. ORA-28040: No matching authentication protocol exception

Some users hit this error when connecting to the database from an old version of TOAD.

Solution: create the sqlnet.ora file (if it does not exist) and add the parameter below

vi $ORACLE_HOME/network/admin/sqlnet.ora

SQLNET.ALLOWED_LOGON_VERSION_SERVER=8
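Because the value differs between environments (11 in the earlier sqlnet.ora listing, 8 here), it helps to check what each node actually has. This is a rough sketch only: sqlnet_param is a hypothetical helper that handles simple single-line NAME=VALUE entries, not multi-line values. Keep in mind that lower values allow older, weaker authentication protocols, so use the highest value the clients can work with.

```shell
# Hypothetical helper: print the value of a single-line parameter from a
# sqlnet.ora file. Names are matched case-insensitively, comment lines are
# skipped, and the last occurrence wins. Returns non-zero if not found.
sqlnet_param() {
    file="$1"; name="$2"
    awk -F= -v want="$name" '
        /^[ \t]*#/ { next }
        {
            key = $1
            gsub(/[ \t]/, "", key)
            if (toupper(key) == toupper(want)) {
                val = $0
                sub(/^[^=]*=/, "", val)
                gsub(/^[ \t]+|[ \t]+$/, "", val)
                found = val
            }
        }
        END { if (found != "") print found; else exit 1 }
    ' "$file"
}

# Example:
#   sqlnet_param "$ORACLE_HOME/network/admin/sqlnet.ora" SQLNET.ALLOWED_LOGON_VERSION_SERVER
```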